With all the recent talk about AI, data centers, and the cloud, it is helpful to remember that processors like GPUs and CPUs make up only about 20% of the upfront cost of a server. They tend to get the most attention because the choice of processor has to be made first, and that decision drives the options for everything else in the server, but they are only a piece of the total cost.

Memory is another 20%. Still, well over half the cost of a server comes from much more prosaic products: printed circuit boards (PCBs), passive components, cables, power supplies, hard drives, and the racks that hold them. (We should add networking, which can cost more than all the rest of the components, but we will save that for another time.) Who sells all that gear, and where does the value accrue?
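To make those shares concrete, here is a minimal Python sketch of the split. The 20% figures for processors and memory come from the text above; the remaining share is simply what is left over, and the $100,000 server price is a made-up number used only for illustration. Networking is left out, as it is in the discussion above.

```python
def server_cost_breakdown(total_cost: float) -> dict[str, float]:
    """Split an upfront server cost using the rough shares cited in the article."""
    # Shares taken from the article: processors ~20%, memory ~20%.
    shares = {"processors": 0.20, "memory": 0.20}
    # Everything else (PCBs, passives, cables, power supplies, drives, racks)
    # is the remainder. Networking is excluded here.
    shares["everything_else"] = 1.0 - sum(shares.values())
    return {part: total_cost * share for part, share in shares.items()}


if __name__ == "__main__":
    # Hypothetical server price, chosen only to make the numbers easy to read.
    for part, cost in server_cost_breakdown(100_000).items():
        print(f"{part:>16}: ${cost:,.0f}")
```

On these rough shares, the "prosaic" parts account for about three dollars of every five spent on the box.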

Editor’s Note:

Guest author Jonathan Goldberg is the founder of D2D Advisory, a multi-functional consulting firm. Jonathan has developed growth strategies and alliances for companies in the mobile, networking, gaming, and software industries.

There are two types of vendors here: OEMs and ODMs. We are not going to spell out the acronyms because that actually confuses the picture. Generally speaking, the OEMs own the brands and the end-customer relationships. The ODMs provide sourcing and manufacturing, meaning the physical production and assembly of all the gear. Between the two, there is considerable overlap in design and systems integration. It is important to keep in mind that the boundaries between OEMs and ODMs are fuzzy, with significant back and forth in many areas.

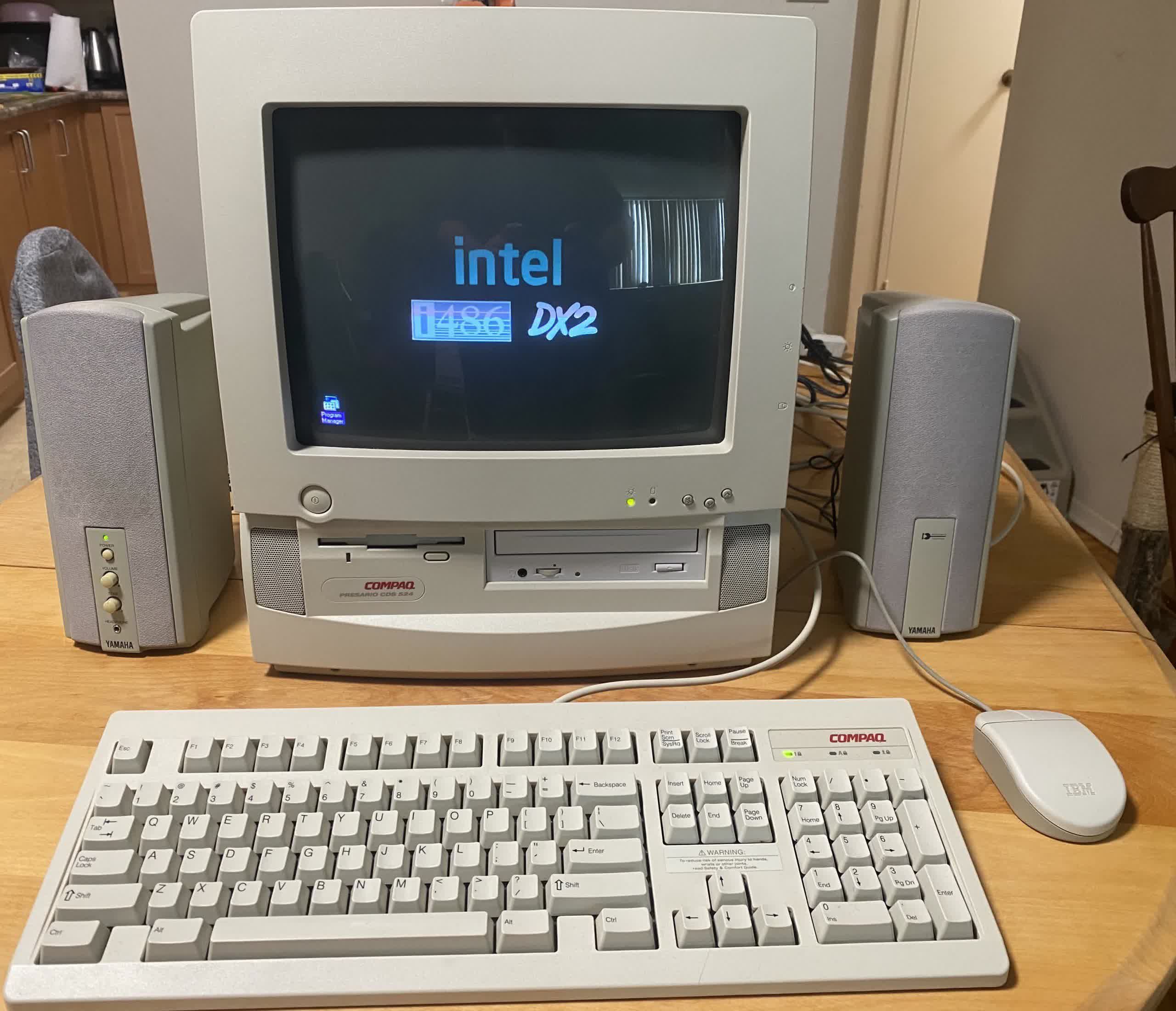

A bit of history. This model came to life during the 1990s as PC makers moved manufacturing from the United States to Asia. The PC brands, the OEMs, outsourced to contract manufacturers largely based in Taiwan. Those companies manufactured gear in Taiwan and later shifted heavily to China. Over time, the contract manufacturers moved up the value chain, adding design capabilities. The contract manufacturers became ODMs, and then many of them spun off separate companies to sell their own branded products, becoming OEMs in their own right. This model then percolated into how most high-volume electronics are produced today.

Servers moved at a slightly different pace. These offered lower volume and higher prices, so the OEMs, the brand owners, held onto design functions (and sometimes manufacturing) for much longer. For many years, the OEMs worked with Intel to design a range of servers. They then sold these to customers. While they offered various configurations, these were largely catalog systems – customers picked from the options available.

The cloud changed all of this.

Most critically, the public cloud providers (a.k.a. the hyperscalers) came to dominate the market, not only concentrating economic power but also technical competence. Over time, the hyperscalers largely cut out the OEMs, working directly with the ODMs to source the systems they designed themselves.

Today, the OEM landscape largely consists of HP, Dell and Lenovo. There are hundreds of ODMs, but the largest are all based in Taiwan and include Compal, Foxconn, Inventec, Quanta and Wistron. These companies are all very diverse, with dozens of subsidiaries spread out across the supply chain. There are also a handful of other ODMs that tend to specialize in specific niches, such as the meme stock of the moment, Supermicro, with its specialty in GPU servers.

How does this work in practice? Today there is a divide between the hyperscalers and essentially everyone else. Imagine a large corporation, such as a bank, a fast food chain, or an automaker. It may still want to own its own servers, or even data centers. It will work with the OEMs, who will offer a catalog of systems to choose from. The OEMs will then typically act as the systems integrator: working with all the vendors to source parts, assembling the PCBs, then wiring everything together and installing the software. The OEMs play an important role here as they are the ones making a lot of the purchase decisions.

By contrast, the hyperscalers operate dozens of data centers. Their business is based on massive economies of scale, and if they can shave 5% off the cost of a server, that leads to hundreds of millions of dollars in savings. On top of that, they have concentrated technical talent. Put simply, they can afford to hire teams to design servers optimized for their specific needs. Other large corporations do not have those teams, nor do they really need them, because they are just not operating at the same scale. The hyperscalers then go directly to the ODMs, who collect all the components, assemble the systems and wire them up. Here, it is the end customer who is making purchase decisions for almost all of the components.
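A rough back-of-the-envelope sketch shows why a 5% saving matters at this scale. Only the 5% rate comes from the text above. The fleet size and per-server cost below are hypothetical placeholders, not figures from any hyperscaler.

```python
def fleet_savings(servers_per_year: int,
                  cost_per_server: float,
                  savings_rate: float = 0.05) -> float:
    """Annual savings from trimming a fixed fraction off each server's cost."""
    return servers_per_year * cost_per_server * savings_rate


if __name__ == "__main__":
    # Hypothetical inputs: 500,000 servers bought per year at $10,000 each.
    servers = 500_000
    unit_cost = 10_000
    spend = servers * unit_cost
    saved = fleet_savings(servers, unit_cost)
    print(f"Annual server spend: ${spend:,.0f}")
    print(f"Savings at 5%:       ${saved:,.0f}")
```

With those assumed inputs, the saving lands in the hundreds of millions of dollars per year, which is easily enough to fund an in-house server design team. A buyer purchasing a few thousand servers would save a small fraction of that and could not justify the same team.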

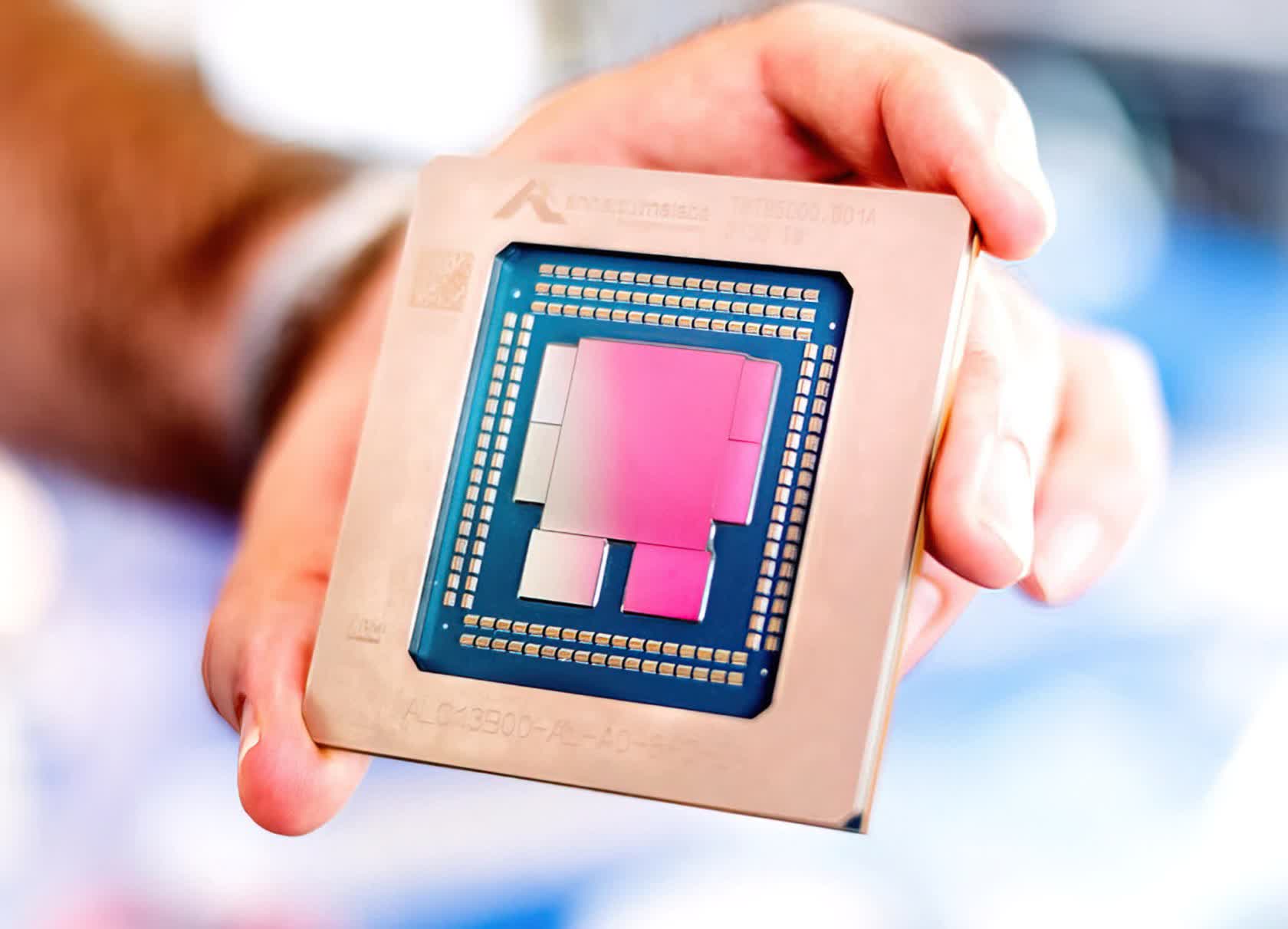

This presents a big problem for all the component vendors. Imagine a chip vendor. They need to convince a customer to buy their chip, but the customer does not want a chip; they want a complete working system. Before they agree to any large orders, the customer will want to test that system and make sure it runs their software well. So the chip vendor has to work with an OEM or ODM to design that system. And these designs cost money. It takes a team of 5-10 people a month or two to lay everything out, verify performance, and ensure firmware and software compatibility. Then someone has to buy components to build a few prototypes.

These costs add up quickly, easily a few hundred thousand dollars per system and often into seven figures. So before the chip vendor can sell a single chip, they have to invest material amounts. Customers all want servers that are as close to their needs as possible, which means someone has to produce multiple versions of the server, and so the costs can balloon. All of this happens before anyone knows how well the platform will sell.
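The arithmetic behind those figures can be sketched as follows. The team size (5-10 people), the duration (one to two months), and the need for multiple versions come from the text. The loaded cost per person-month, the prototype count, and the prototype unit cost are hypothetical assumptions picked only to show how the total scales.

```python
def estimate_design_cost(team_size: int,
                         months: int,
                         variants: int = 1,
                         cost_per_person_month: int = 20_000,  # assumed
                         prototypes: int = 5,                  # assumed
                         cost_per_prototype: int = 30_000      # assumed
                         ) -> int:
    """Upfront cost of designing and prototyping `variants` server versions."""
    engineering = team_size * months * cost_per_person_month
    hardware = prototypes * cost_per_prototype
    # Simplification: each variant is treated as a full, separate design effort.
    return (engineering + hardware) * variants


if __name__ == "__main__":
    low = estimate_design_cost(team_size=5, months=1)
    high = estimate_design_cost(team_size=10, months=2, variants=3)
    print(f"One design, small team:    ${low:,}")
    print(f"Three variants, full team: ${high:,}")
```

Under these assumptions a single design costs a few hundred thousand dollars, and three variants with a larger team push the total into seven figures, all of it spent before the first production order. In practice variants would share some engineering work, so multiplying by the variant count is an upper-end simplification.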

This problem has gotten worse. When it was only Intel and AMD selling server CPUs, the supply chain had a constrained decision space, with well-established providers. Now that there are a dozen CPU designers, the combinatorics are much more daunting. Anyone looking to enter the market for AI accelerators has to contend with all these costs. And smaller vendors have to be very careful how they place their bets.

Invest in support for a hot chip and the rewards can be immense, but invest in the wrong platforms and the result is big losses. The problem is even more acute when it comes to selling to the hyperscalers. They want a lot more than a few prototypes. They have rigorous testing cycles that move from a dozen systems to a hundred to a few thousand. They may pay for those (or not), but any company designing a chip needs a lot more volume than that to justify the costs of the test systems, let alone the cost of the entire chip.

Of course, there are all sorts of initiatives to standardize much of this. The Open Compute Project’s core mission is to standardize the design of servers. And while OCP has made some major contributions to the industry, we do not think anyone would describe it as a common standard. All of this is going to get more complex.

The growing diversification of data centers, from CPU-only to heterogeneous compute, is forcing all the vendors, not just the chip designers, to start taking on some heavy risk. Many will chase every deal; others will probably fall back on old habits, focusing on AMD and Intel and now Nvidia. The smart ones will take a portfolio approach to their business and monitor their choices in ways that resemble hedge fund managers or venture investors. We do not intend to be alarmist. Much of this is a natural part of electronics cyclicality. Over time, the industry will find some new equilibrium, but the next few years are going to be much more chaotic.

Copyright for syndicated content belongs to the linked Source : TechSpot – https://www.techspot.com/news/102689-bending-metal-changing-server-business.html