A version of this post originally appeared on Tedium, Ernie Smith’s newsletter, which hunts for the end of the long tail.

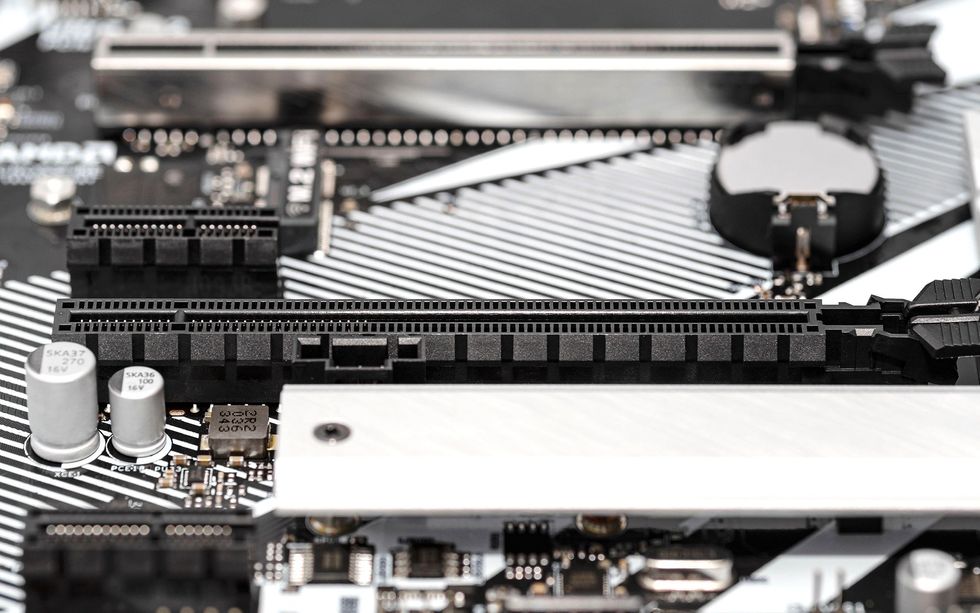

Personal computing has changed a lot in the past four decades, and one of the biggest changes, perhaps the most unheralded, comes down to compatibility. These days, you generally can’t fry a computer by plugging in a joystick that the computer doesn’t support. Simply put, standardization slowly fixed this. One of the best examples of a bedrock standard is the peripheral component interconnect, or PCI, which came about in the early 1990s and appeared in some of the decade’s earliest consumer machines three decades ago this year. To this day, PCI slots are used to connect network cards, sound cards, disk controllers, and other peripherals to computer motherboards via a bus that carries data and control signals. PCI’s lessons gradually shaped other standards, like USB, and ultimately made computers less frustrating. So how did we get it? Through a moment of canny deception.

[Video: Commercial – Intel Inside Pentium Processor (1994), via YouTube]

Embracing standards: the computing industry’s gift to itself

In the 1980s, when you used the likes of an Apple II or a Commodore 64 or an MS-DOS machine, you were essentially locked into an ecosystem. Floppy disks often weren’t compatible. The peripherals didn’t work across platforms. If you wanted to sell hardware in the 1980s, you were stuck building multiple versions of the same device.

For example, the KoalaPad was a common drawing tool sold in the early 1980s for numerous platforms, including the Atari 800, the Apple II, the TRS-80, the Commodore 64, and the IBM PC. It was essentially the same device on every platform, and yet, KoalaPad’s manufacturer, Koala Technologies, had to make five different versions of this device, with five different manufacturing processes, five different connectors, five different software packages, and a lot of overhead. It was wasteful, made being a hardware manufacturer more costly, and added to consumer confusion.

[Video: Drawing on a 1983 KoalaPad (Apple IIe), via YouTube]

This slowly began to change around 1982, when the market of IBM PC clones started taking off. It was a happy accident: IBM’s decision to use a bunch of off-the-shelf components for its PC accidentally turned them into a de facto standard. Gradually, it became harder for computing platforms to become islands unto themselves. Even when IBM itself tried and failed to sell the computing world on a bunch of proprietary standards in its PS/2 line, it didn’t work. The cat was already out of the bag. It was too late.

So how did we end up with the standards that we have today, and the PCI expansion card standard specifically? PCI wasn’t the only game in town—you could argue, for example, that if things played out differently, we’d all be using NuBus or Micro Channel architecture. But it was a standard seemingly for the long haul, far beyond other competing standards of its era.

Who’s responsible for spearheading this standard? Intel. While PCI was a cross-platform technology, it proved to be an important strategy for the chipmaker to consolidate its power over the PC market at a time when IBM had taken its foot off the gas, choosing to focus on its own PowerPC architecture and narrower plays like the ThinkPad instead, and was no longer shaping the architecture of the PC.

The vision of PCI was simple: an interconnect standard that was not intended to be limited to one line of processors or one bus. But don’t mistake standardization for cooperation. PCI was a chess piece—a part of a different game than the one PC manufacturers were playing.

In the early 1990s, Intel needed a win

In the years before Intel’s Pentium processor came out in 1993, there seemed to be some skepticism about whether Intel could maintain its status at the forefront of the desktop-computing field.

In lower-end consumer machines, players like Advanced Micro Devices (AMD) and Cyrix were starting to throw their weight around. At the high end of the professional market, workstation-level computing from the likes of Sun Microsystems, Silicon Graphics, and Digital Equipment Corporation suggested there wasn’t room for Intel in the long run. And laterally, the company suddenly found itself competing with a triple threat of IBM, Motorola, and Apple, whose PowerPC chip was about to hit the market.

A Bloomberg piece from the period painted Intel as being boxed in between these various extremes:

If its rivals keep gaining, Intel could eventually lose ground all around.

This is no idle threat. Cyrix Corp. and Chips & Technologies Inc. have re-created—and improved—Intel’s 386 without, they say, violating copyrights or patents. AMD has at least temporarily won the right in court to make 386 clones under a licensing deal that Intel canceled in 1985. In the past 12 months, AMD has won 40% of a market that since 1985 has given Intel $2 billion in profits and a $2.3 billion cash hoard. The 486 may suffer next. Intel has been cutting its prices faster than for any new chip in its history. And in mid-May, it chopped 50% more from one model after Cyrix announced a chip with some similar features. Although the average price of a 486 is still four times that of a 386, analysts say Intel’s profits may grow less than 5% this year, to about $850 million.

Intel’s chips face another challenge, too. Ebbing demand for personal computers has slowed innovation in advanced PCs. This has left a gap at the top—and most profitable—end of the desktop market that Sun, Hewlett-Packard Co., and other makers of powerful workstations are working to fill. Thanks to microprocessors based on a technology known as RISC, or reduced instruction-set computing, workstations have dazzling graphics and more oomph—handy for doing complex tasks and moving data faster over networks. And some are as cheap as high-end PCs. So the workstation makers are now making inroads among such PC buyers as stock traders, banks, and airlines.

This was a deep underestimation of Intel’s market position, it turned out. The company was actually well-positioned to shape the direction of the industry through standardization. They had a direct say on what appeared on the motherboards of millions of computers, and that gave them impressive power to wield. If Intel didn’t want to support a given standard, that standard would likely be dead in the water.

How Intel crushed a standards body on the way to giving us an essential technology

The Video Electronics Standards Association, or VESA, is perhaps best known today for its mounting system for computer monitors and its DisplayPort technology. But in the early 1990s, it was working on a video-focused successor to the Industry Standard Architecture (ISA) internal bus, widely used in IBM PC clones.

A bus, the physical wiring that lets a CPU talk to internal and external peripheral devices, is something of a bedrock of computing—and in the wrong setting, a bottleneck. The ISA expansion card slot, which had become a de facto standard in the 1980s, had given the IBM PC clone market something to build against during its first decade. But by the early 1990s, for high-bandwidth applications, particularly video, it was holding back innovation. It just wasn’t fast enough to keep up, even after it had been upgraded from being able to handle 8 bits of data at once to 16.

That’s where the VESA Local Bus (VL-Bus) came into play. Built to work primarily with video cards, the standard offered a faster connection, and could handle 32 bits of data. It was targeted at the Super VGA standard, which offered higher resolution (up to 1280 x 1024 pixels) and richer colors at a time when Windows was finally starting to take hold in the market. To overcome the limitations of the ISA bus, graphics card and motherboard manufacturers started collaborating on proprietary interfaces, creating an array of incompatible graphics buses. The lack of a consistent experience around Super VGA led to VESA’s formation. The new VESA slot, which extended the existing 16-bit ISA bus with an additional 32-bit video-specific connector, was an attempt to fix that.

It wasn’t a massive leap—more like a stopgap improvement on the way to better graphics.

And it looked like Intel was going to go for the VL-Bus. But there was one problem: Intel actually wasn’t feeling it, and it didn’t exactly make that point clear to the companies supporting the VESA standards body until it was too late for them to react.

Intel revealed its hand in an interesting way, according to The San Francisco Examiner tech reporter Gina Smith:

Until now, virtually everyone expected VESA’s so-called VL-Bus technology to be the standard for building local bus products. But just two weeks before VESA was planning to announce what it came up with, Intel floored the VESA local bus committee by saying it won’t support the technology after all. In a letter sent to VESA local bus committee officials, Intel stated that supporting VESA’s local bus technology “was no longer in Intel’s best interest.” And sources say it went on to suggest that VESA and Intel should work together to minimize the negative press impact that might arise from the decision.

Good luck, Intel. Because now that Intel plans to announce a competing group that includes hardware heavyweights like IBM, Compaq, NCR and DEC, customers and investors (and yes, the press) are going to wonder what in the world is going on.

Not surprisingly, the people who work for VESA are hurt, confused and angry. “It’s a political nightmare. We’re extremely surprised they’re doing this,” said Ron McCabe, chairman for the committee and a product manager at VESA member Tseng Labs. “We’ll still make money and Intel will still make money, but instead of one standard, there will now be two. And it’s the customer who’s going to get hurt in the end.”

But Intel had seen an opportunity to put its imprint on the computing industry. That opportunity came in the form of PCI, a technology that the firm’s Intel Architecture Labs started developing around 1990, two years before the fateful rejection of VESA. Essentially, Intel had been playing both sides on the standards front.

Why PCI

Why make such a hard shift, screwing over a trusted industry standards body out of nowhere? Beyond wanting to put its mark on the standard, Intel also saw an opportunity to build something more future-proof; something that could benefit not just graphics cards but every expansion card in the machine.

As John R. Quinn wrote in PC Magazine in 1992:

Intel’s PCI bus specification requires more work on the part of peripheral chip-makers, but offers several theoretical advantages over the VL-Bus. In the first place, the specification allows up to ten peripherals to work on the PCI bus (including the PCI controller and an optional expansion-bus controller for ISA, EISA, or MCA). It, too, is limited to 33 MHz, but it allows the PCI controller to use a 32-bit or a 64-bit data connection to the CPU.

In addition, the PCI specification allows the CPU to run concurrently with bus-mastering peripherals—a necessary capability for future multimedia tasks. And the Intel approach allows a full burst mode for reads and writes (Intel’s 486 only allows bursts on reads).

Essentially, the PCI architecture is a CPU-to-local bus bridge with FIFO (first in, first out) buffers. Intel calls it an “intermediate” bus because it is designed to uncouple the CPU from the expansion bus while maintaining a 33-MHz 32-bit path to peripheral devices. By taking this approach, the PCI controller makes it possible to queue writes and reads between the CPU and PCI peripherals. In theory, this would enable manufacturers to use a single motherboard design for several generations of CPUs. It also means more sophisticated controller logic is necessary for the PCI interface and peripheral chips.

To put that all another way, VESA came up with a slightly faster bus standard for the next generation of graphics cards, one just fast enough to meet the needs of Intel’s recent i486 microprocessor users. Intel came up with an interface designed to reshape the next decade of computing, one that it would let its competitors use. This bus would allow people to upgrade their processor across generations without needing to upgrade their motherboard. Intel brought a gun to a knife fight, and it made the whole debate about VL-Bus seem insignificant in short order.
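To put rough numbers on the figures Quinn quotes, here is a minimal sketch of the arithmetic. It assumes the best case of one transfer per clock cycle (sustained burst mode), uses the rounded 33 MHz clock from the quote, and the function name is purely illustrative. Real-world throughput would be lower.

```python
def peak_bandwidth_mb_per_s(clock_mhz: float, bus_width_bits: int) -> float:
    """Theoretical peak throughput in MB/s.

    Assumption: one full-width transfer on every clock cycle,
    which is a best case and not a measured figure.
    """
    bytes_per_transfer = bus_width_bits / 8
    return clock_mhz * bytes_per_transfer


# The two data-path widths mentioned in the PC Magazine quote.
for width in (32, 64):
    rate = peak_bandwidth_mb_per_s(33, width)
    print(f"{width}-bit path at 33 MHz: about {rate:.0f} MB/s peak")
```

Under those assumptions, the 32-bit path tops out at roughly 132 MB/s, and the 64-bit option doubles that.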

The result was that, no matter how miffed the VESA folks were, Intel had consolidated power for itself by creating an open standard that would eventually win the next generation of computers. Sure, Intel let other companies use the PCI standard, even companies like Apple that weren’t directly doing business with Intel on the CPU side. But Intel, by pushing forth PCI, suddenly made itself relevant to the entire next generation of the computing industry in a way that ensured it would have a second foothold in hardware. The “Intel Inside” marketing label was not limited to the processors, as it turned out.

The influence of Intel’s introduction of PCI is still felt: Thirty-two years later, and three decades after PCI became a major consumer standard, we’re still using PCI derivatives in modern computing devices.

PCI and other standards

Looking at PCI, and its successor PCI Express, less as ways that we connect the peripherals we use with our computers, and more as a way for Intel to maintain its dominance over the PC industry, highlights something fascinating about standardization.

It turns out that perhaps Intel’s greatest investment in computing in the 1990s was not the Pentium processor, but its investment in Intel Architecture Labs, which quietly made the entire computing industry better by working on the things that frustrated consumers and manufacturers alike.

Essentially, as IBM had begun to take its eye off the massive clone market it unwittingly built during this period, Intel used standardization to fill the power void. It worked pretty well, and made the company integral to computer hardware beyond the CPU. In fact, devices you use daily—that Intel played zero part in creating—have benefited greatly from the company’s standards work. If you’ve ever used a device with a USB or Bluetooth connection, you can thank Intel for that.

Craig Kinnie, the director of Intel Architecture Labs in the 1990s, said it best in 1995, upon coming to an agreement with Microsoft on a 3D graphics architecture for the PC platform. “What’s important to us is we move in the same direction,” he said. “We are working on convergent paths now.”

That was about collaborating with Microsoft. But really, it has been Intel’s modus operandi for decades—what’s good for the technology field is good for Intel. Innovations developed or invented by Intel—like Thunderbolt, Ultrabooks, and Next Unit Computers (NUCs)—have done much to shape the way we buy and use computers.

For all the talk of Moore’s Law as a driving factor behind Intel’s success, the true story might be its sheer cat-herding capabilities. The company that builds the standards builds the industry. Even as Intel faces increasing competition from alliterative processing players like ARM, Apple, and AMD, as long as it doesn’t lose sight of the roles standards played in its success, it might just hold on a few years longer.

Ironically, Intel’s standards-driving winning streak, now more than three decades old, might have all started the day it decided to walk out on a standards body.


Copyright for syndicated content belongs to the linked Source : IEEE – https://spectrum.ieee.org/intel-pci-history