Explore some of the sticking points in evolving to a digital operating model and why a clear strategy for a real-time AI platform is a critical part of building AI into applications.

By George Trujillo, Principal Data and AI Strategist, DataStax

Over the past couple of months, I’ve met with 60+ executives in closed-room discussions and presented to over 400 attendees in virtual presentations. From these interactions, I’ve narrowed down five challenges that repeatedly come up. Do any of these look familiar in your organization?

- A lack of a unifying vision and leader to drive a digital transformation (a “lack of buy in,” in other words)

- A lack of alignment on the technology stack to execute a strategy for a digital operating model (low collaboration)

- Poor data and machine learning model governance (lack of expertise in offensive strategy to go digital)

- No enterprise alignment on applications, data, artificial intelligence, and analytics (a technology Tower of Babel)

- An inability to overcome complexity and scale challenges in data and process management for real-time AI (change management)

All these challenges are a part of the transformational shift that’s occurring as organizations attempt to move from a traditional operating model to a digital one that provides a foundation for applications leveraging real-time artificial intelligence (AI). AI will drive the biggest platform shift since the internet in the mid-1990s, and determining the right real-time AI technology stack or platform will be integral to strategy execution. Yet most organizations aren’t prepared for the organizational transformation or execution speed needed to support new business opportunities.

Here, I’ll look at some of the sticking points in evolving to a digital operating model and why a clear strategy for a real-time AI platform is a critical part of building AI into applications, as well as offer up some important characteristics of a successful digital operating model. I’ll also share how leveraging AI with large language models (LLMs) and LLM plugins can quickly and easily improve the customer experience in ways that impact the business. This is what business innovators, product managers, e-commerce leaders, and anyone involved in digital engagement are looking for.

Avoiding past mistakes

I’ve spent the last 12 years architecting or leading data initiatives to help organizations drive business value from AI and analytics. I’ve seen too many companies struggle because they lost strategic focus, failed to get buy-in, stumbled on change management, got lost in shiny new toys, picked disjointed technology stacks, or believed a silver bullet (Hadoop, data lakes, a data mesh, or data fabric) would solve everything.

In today’s world, there’s a north star: the customer and the business are often won in real-time via a digital operating model. An operating model is the blueprint and execution plan for generating value from an organization’s business models. A digital operating model is the defined approach for aligning execution strategy to deliver customer value by leveraging digital capabilities and technologies for business success. It requires a holistic enterprise approach that aligns the data and AI strategies.

Traditional operating models built over the last twenty years just don’t work for meeting today’s business demands. This is where aligning the execution of a real-time AI platform with a digital operating model approach comes in. A real-time AI platform is the software foundation to support the execution of a digital operating model.

What I refer to as traditional operating models are the enterprise technical ecosystems that underpin today’s analytics and AI, built by connecting disparate silos one at a time. Organizations’ technology stacks were often assembled by prioritizing what seemed to be the best technical decision, one project or initiative at a time, by different teams with narrowly focused goals. There was rarely a holistic, enterprise-wide architecture view or operating model of how a technology fit into the overall organizational strategy to support business objectives. Traditional operating models were built for analytics and AI downstream in the data warehouse and data lakes. Digital operating models must address the upstream processing of leveraging AI for real-time decisions, actions, and predictions.

The shiny new toy and the demands to deliver on a project often outweigh all other considerations. With big data, for instance, there was the rush to get data into the data lake, and then let the data scientists figure it out from there. The internet created very application-centric environments, where data always took a lower priority. The impacts of these approaches are seen today in poor data architecture, data modeling, data governance, data quality, and data integration—all of which impact the quality of AI.

All the data mistakes made in building out data lakes and the rush to get new mobile apps and chatbots out are being repeated in the rush to build out AI solutions. This reminds me of a quote attributed to psychoanalyst Theodor Reik: “There are recurring cycles, ups and downs, but the course of events is essentially the same, with small variations. It has been said that history repeats itself. This is perhaps not quite correct; it merely rhymes.”

Data is the foundation for AI

Organizations will face challenges in maturing to a digital operating model, especially one designed to scale AI across business units. At the center of a digital operating model will be a real-time data ecosystem that drives business outcomes with AI, as delivering the customer experience in real-time is critical in today’s business world.

The customer and the business are won in real-time in a digital world. A customer’s digital and mobile experience in “real-time” is often valued as highly as the product. But providing this kind of experience, via a digital operating model, requires a new architecture and mindset, along with novel skills, technology, methods, and processes.

AI feeds and lives on data. Data is the foundation of AI, yet only 23% of C-suite executives believe they are data-driven. It’s no longer just a gap that’s growing between organizations succeeding with AI and those that continue to struggle or make slow progress; it’s becoming a fork in the road that separates organizations that will be all in on AI and those that continue struggling to make the transition to AI. The problem for the laggards is the customer will gravitate to companies that provide great experiences in real-time.

Is your organization part of the 71% failure or the 35% success path for digital transformation with data and AI?

- 35% of leaders say they’re on track for digital transformation

- 71% of business decision-makers state their company failed to deliver on the promise of digital transformation

An organization can increase its success by using a proven blueprint or platform. Data feeds AI. Quality and trusted data are the foundation that AI is built on. A successful AI strategy requires a data-centric approach. Data will determine the impact of AI.

Digital operating model

Digital operating models and real-time AI platforms aren’t new. In the past, digital operating models were executed by technical craftspeople and artisans, similar to how automobiles were built by hand before assembly-line mass production. This required a lot of expertise and resources typically found in FAANG companies that can move fast, or small startups that do not have to carry the technical debt of traditional operating models. Now “platforms” such as real-time AI platforms are industrializing and automating the technologies, processes, and methods to increase the scale and speed of delivering business value with digital transformation driven by real-time AI.

A real-time AI platform

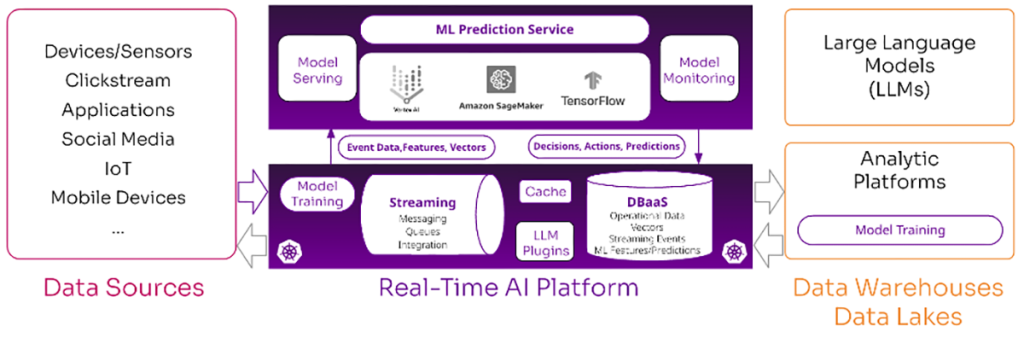

In the CIO article “Building a vision for real-time artificial intelligence,” I review the data components of a real-time AI platform. Such a platform is the software, data, and technology that is machine learning-driven, with streaming, operational, and feature data for real-time decision-making.

Let’s look at the machine learning side of the real-time AI platform. It’s important to view machine learning (ML) models and engines as software, and, as such, cloud-native capabilities and containers are as important for deploying ML models as they are for deploying microservices. All the advantages of cloud-native capabilities and Kubernetes (reduced complexity, increased resilience, consistency, unit testing, and componentization of services) should be leveraged by ML models in a real-time AI platform.

The data streams through which real-time data moves and the databases where real-time data persists are also becoming cloud native. DataOps and MLOps are no longer buzzwords. A common success pattern with digital operating models is the leveraging of Kubernetes as an enterprise strategy.

ML models can be trained in real time or built offline on analytical platforms. A model is moved into production by being promoted to a model-serving layer, which provides the runtime context for the ML models. The models are then made available through APIs (for example, REST or gRPC endpoints).
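To make the promotion step concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not how any particular serving product works: `ModelServingLayer`, `promote`, `handle_request`, and the `churn_model` stand-in are all hypothetical names, and the JSON string plays the role of the body of a REST request.

```python
import json
from typing import Callable, Dict, List

# Assumption: a "model" is any callable from a feature list to a score.
Model = Callable[[List[float]], float]


class ModelServingLayer:
    """Toy serving layer: holds promoted models and answers JSON requests."""

    def __init__(self) -> None:
        self._production: Dict[str, Model] = {}

    def promote(self, name: str, model: Model) -> None:
        """Move a trained model into production under a stable name."""
        self._production[name] = model

    def handle_request(self, body: str) -> str:
        """Handle a JSON body such as {"model": "...", "features": [...]}."""
        request = json.loads(body)
        model = self._production.get(request["model"])
        if model is None:
            return json.dumps({"error": "unknown model"})
        score = model(request["features"])
        return json.dumps({"model": request["model"], "prediction": score})


def churn_model(features: List[float]) -> float:
    """Stand-in for a model built offline: a fixed linear score."""
    weights = [0.5, 0.25]
    return sum(w * x for w, x in zip(weights, features))


serving = ModelServingLayer()
serving.promote("churn-v1", churn_model)
response = serving.handle_request('{"model": "churn-v1", "features": [2.0, 4.0]}')
print(response)  # {"model": "churn-v1", "prediction": 2.0}
```

The point of the sketch is the separation of concerns: training produces a callable, promotion registers it under a stable name, and applications only ever see the request and response format.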

Here is a holistic, high-level view of a modern real-time AI platform:

- A real-time AI platform as the central core of the digital strategy that supports a holistic execution view of the ecosystem

- Data ingestion that aligns messaging, queueing, and streaming across a wide variety of different sources for efficient processing

- A database strategy that supports the integration and processing of day-to-day operational data, vectors (numerical representations of an object or entity that support AI/ML functions), and streaming event data with ML feature and prediction data

- Small data model training using vectors in vector databases for low-latency similarity searches, real-time decisioning, and seamless integration with machine learning workflows

- Large data model training in analytical data sets (in data warehouses, data lakes, and cloud storage)

- A memory cache where sub-second decisions are required

- LLM plugins that make it easy for applications to provide input values and receive output results from LLM data

- A real-time data and AI strategy that feeds analytics platforms that store data in data warehouses, data lakes, and cloud storage environments for building AI models, analytics, and corporate reporting
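As one illustration of the memory cache item in the list above, the following is a rough sketch of a read-through cache with a time-to-live placed in front of a slower operational store. Everything here is an assumption made for the example: the `FeatureCache` class, the `OPERATIONAL_STORE` dictionary standing in for a database, and the 30-second TTL.

```python
import time
from typing import Callable, Dict, List, Tuple

Features = List[float]


class FeatureCache:
    """Read-through cache with a time-to-live, in front of a slower store."""

    def __init__(self, load: Callable[[str], Features], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._load = load            # fetches features from the backing store
        self._ttl = ttl_seconds      # how long a cached entry stays fresh
        self._clock = clock          # injectable so expiry can be tested
        self._entries: Dict[str, Tuple[float, Features]] = {}
        self.misses = 0

    def get(self, key: str) -> Features:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]          # fresh hit: no trip to the store
        self.misses += 1             # absent or stale: reload and re-cache
        features = self._load(key)
        self._entries[key] = (now, features)
        return features


# Hypothetical operational store holding per-customer feature values.
OPERATIONAL_STORE = {"customer-42": [3.0, 120.5]}


def load_from_store(customer_id: str) -> Features:
    return OPERATIONAL_STORE[customer_id]


cache = FeatureCache(load_from_store, ttl_seconds=30.0)
first = cache.get("customer-42")   # miss: loaded from the store
second = cache.get("customer-42")  # hit: served from memory
print(first, second, cache.misses)
```

The TTL is the design choice that matters: it trades freshness of feature data against how often the decision path has to wait on the database.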


A digital operating model facilitates data flowing easily from one end of a data ecosystem to the other, and back. Integration is where organizations win. A traditional operating model has silos that inhibit data flows across an ecosystem. A strong and resilient enterprise-wide data foundation is necessary to provide the agility for innovation with AI. Real-time decisions require data flow across the entire data ecosystem in both directions.

ML systems encompass many different services and require collaboration with data scientists, business leaders, data stewards, developers, and SREs. An ML system’s complexity needs to be balanced by reducing complexity in the data ecosystem that feeds it. Industrializing data and AI to execute at speed requires some level of standardization.

A standardized data core must support different types of real-time data patterns (i.e. streaming, messaging, and queueing) and a wide variety of different data types while maintaining agility. ML systems that have complex, disparate data systems feeding them make the ML system rigid and fragile. As AI is scaled and grows across business lines, can the current technology stack, processes, and architecture scale to match it?

Real-time decisioning with a machine learning engine does not occur without the ability to manage real-time streaming, messaging, and queueing data at scale and speed. The Architect’s Guide to Real-Time AI with NoSQL is a great resource to share with your enterprise architects. It contains an overview of the use cases, best practices, and reference architectures for applying NoSQL data technology to real-time machine learning and AI-powered applications.

The right underlying architecture is essential in building out a real-time AI platform. A common problem: fundamental architectural issues are often not discovered when the ML environment is built. Poor architecture surfaces when growing and maintaining the environment, and manifests in how quickly you can update models and how easy it is to monitor, measure, and scale processes, workflow, and deployment methods. The foundation of a real-time architecture is worth its weight in gold here.

An operating model for a business must deliver business value. Here are a few characteristics to consider as your teams build out a digital operating model:

- An operating architecture that leverages a cloud-native approach for DevOps, DataOps, and MLOps to support growth in scale and scope across lines of business and business operating models.

- A holistic strategy and view of a data and AI ecosystem. There are too many components and stakeholders for a disparate set of technologies and vertical siloed views to succeed in the AI race for customers and markets.

- A core real-time data ecosystem in which the data ingestion platform and databases seamlessly work together.

- A real-time data platform strategy that can support a certain level of standardization, and a data architecture that’s flexible enough to support different machine learning engines and inference services, such as Vertex AI, Amazon SageMaker, and TensorFlow.

- Open-source technology for innovation, operational flexibility, and managing unit costs.

- Scalability with a distributed architecture.

- Multi-purpose and cloud agnostic. Cloud capabilities are critical for a digital operating model. However, it’s critical to be data- and AI-driven to leverage cloud capabilities. Executing a data-driven strategy that leverages cloud capabilities leads to very different outcomes than executing a cloud strategy with data.

The AI accelerants: ChatGPT and vector search

The arrival of ChatGPT and vector search capabilities in databases is accelerating the current speed of change. Imagine a world where an LLM agent like ChatGPT is granted access to data stored in a database that has vector search, which is an innovative and powerful approach to searching for and retrieving data.
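For readers who haven’t seen vector search up close, here is a minimal sketch of the core idea: items are stored as vectors, and a query returns the stored vectors most similar to it. This is a brute-force illustration in plain Python, not how a production vector database indexes data. The three-dimensional embeddings in `CATALOG` are made up for the example, and `vector_search` is a hypothetical helper name.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def vector_search(query: Vector, index: Dict[str, Vector],
                  k: int) -> List[Tuple[str, float]]:
    """Return the k entries most similar to the query, best match first."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Made-up embeddings; a real system would get these from an embedding model.
CATALOG = {
    "cedar deck plank": [0.9, 0.1, 0.0],
    "steel support beam": [0.7, 0.6, 0.1],
    "garden hose": [0.0, 0.2, 0.9],
}

query_embedding = [1.0, 0.2, 0.0]  # hypothetical embedding of a deck question
for name, score in vector_search(query_embedding, CATALOG, k=2):
    print(f"{name}: {score:.3f}")
```

With these toy vectors the two deck-related items rank ahead of the garden hose, which is the behavior that lets an application find relevant rows without exact keyword matches.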

Integrating ChatGPT with a home improvement store’s product catalog could enable a consumer to query ChatGPT: “I’m building a 10×20 deck this weekend. I want to use 5-inch Cedar planks with steel reinforcing beams. What do I need?” The app could respond with a bill of materials, the store location closest to the user’s home, and the availability of all components, and enable the consumer to buy and schedule a pickup where everything has been pulled for them in the loading dock. The app could also suggest how long it might take to complete the project, based on local permit requirements, and how long it might take for the stain to dry (based on local weather trends).

This isn’t science fiction. It’s a simple example of how LLMs will become part of the application stack in real-time, leveraging large amounts of data stored in a highly scalable database. Streaming data, vector search in a database, and AI are all combining to change the art of the possible with the customer experience in real-time. All of this can be done with a database that is AI-capable with vector search, an LLM plugin, and fewer than 100 lines of code, with no model training. The AI race is definitely speeding up. Are you leveraging these capabilities? Are your competitors?
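The “no model training” part deserves a quick illustration. The sketch below shows one common way to do it, assuming the application retrieves relevant catalog rows and passes them to the LLM inside the prompt. Both `fake_retrieve` and `fake_llm` are stubs standing in for a database query and an LLM call, and the product names, stock counts, and store label are invented for the example.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Product:
    name: str
    in_stock: int
    store: str


def build_prompt(question: str, products: List[Product]) -> str:
    """Ground the LLM in retrieved catalog rows instead of retraining it."""
    header = "Answer using only the catalog rows below.\nCatalog:"
    rows = [f"- {p.name}: {p.in_stock} in stock at {p.store}" for p in products]
    return "\n".join([header, *rows, f"Question: {question}"])


def answer(question: str,
           retrieve: Callable[[str], List[Product]],
           llm: Callable[[str], str]) -> str:
    """Retrieve relevant rows, then hand the grounded prompt to the LLM."""
    products = retrieve(question)
    return llm(build_prompt(question, products))


def fake_retrieve(question: str) -> List[Product]:
    """Stub for a database lookup; returns hypothetical rows."""
    return [
        Product("5-inch cedar plank", 240, "Store #12"),
        Product("steel reinforcing beam", 18, "Store #12"),
    ]


def fake_llm(prompt: str) -> str:
    """Stub for an LLM call; reports only the size of the prompt it received."""
    return f"(stub reply to a {len(prompt.splitlines())}-line prompt)"


print(answer("What do I need for a 10x20 deck?", fake_retrieve, fake_llm))
```

Because `retrieve` and `llm` are passed in as plain callables, swapping the stubs for a real database query and a real LLM client would not change `answer` or `build_prompt`.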

Wrapping up

A real-time AI platform that reduces the complexity of aligning streaming data, an operational real-time data store, and ML/AI is the foundation of a digital operating model. Vector search capabilities with a vector database will become part of the foundation for real-time AI platforms. Applications, data, and AI are being implemented together more and more. A real-time AI platform must be designed to seamlessly bring data and AI together. All the data that drives AI needs to be processed, integrated, and persisted to execute with speed and scale while maintaining low latency as an environment grows.

The potential for exponential growth must be architected into a digital environment with a defined digital operating model; a real-time AI platform helps with this.

Learn how DataStax enables real-time AI.

About George Trujillo

George Trujillo is principal data strategist at DataStax. Previously, he built high-performance teams for data-value driven initiatives at organizations including Charles Schwab, Overstock, and VMware. George works with CDOs and data executives on the continual evolution of real-time data strategies for their enterprise data ecosystem.