Photo Credit: Google

Google I/O 2024 is underway with several new generative media models and tools showcased this year. The Music AI Sandbox raises questions about whether these generative models will impact businesses that license sound to creators.

In collaboration with YouTube and a group of artists, songwriters, and producers, Google has developed a suite of music AI tools it is calling Music AI Sandbox. Google says the new tools are designed to “open a new playground for creativity” and give people a way to create instrumental sections from scratch with no prior music knowledge needed.

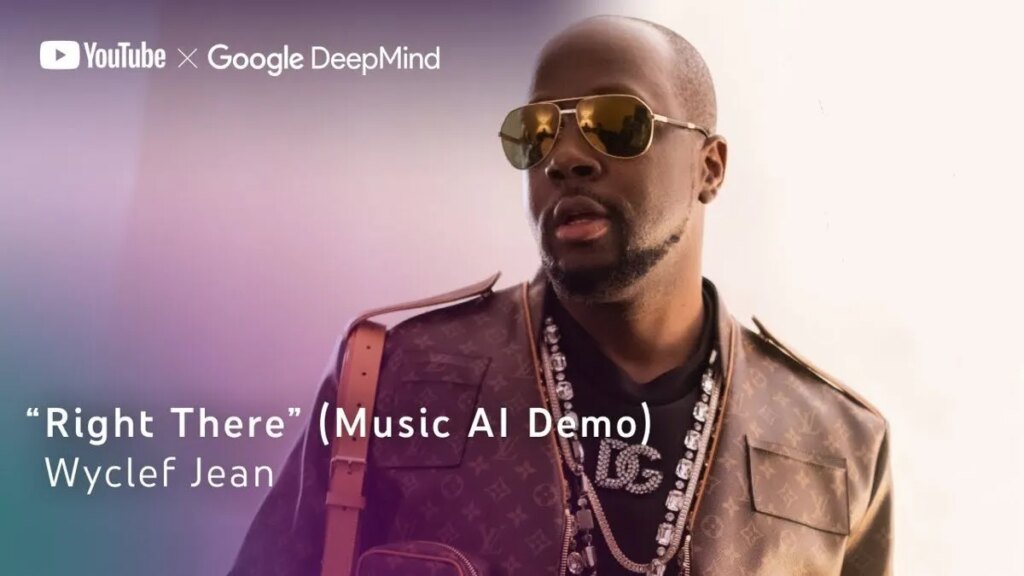

Wyclef Jean, Justin Tranter, and Marc Rebillet are on board—releasing new demo recordings on their YouTube channels that were created with help from Google’s music AI tools.

Alongside the demo reveal comes a statement about being responsible with new AI tech. “For each of these technologies, we’ve been working with the creative community and other external stakeholders, gathering insights and listening to feedback to help us improve and deploy our technologies in safe and responsible ways,” the blog post reads. “We’ve been conducting safety tests, applying filters, setting guardrails, and putting our safety teams at the center of development.”

While Google is hard at work on creating an AI music sandbox, lawsuits will hammer out the argument over how much ‘fair use’ there is in using copyrighted data to train these models. ‘Fair use’ analysis depends on several factors, so it remains to be seen whether these models can claim fair use under copyright law.

One factor courts will likely consider is the purpose and character of the use: whether the use is commercial or non-profit, and whether it is transformative and adds something new to the copyrighted work. Non-commercial uses may fall under the fair use label, but commerciality is just one aspect of determining fair use. AI models trained on copyrighted works are often used for commercial purposes. OpenAI, Midjourney, and Anthropic all charge a subscription to access their models.

The second factor that courts will analyze is the nature of the copyrighted work, meaning whether it is factual or creative. This is where AI companies will run into trouble, because generative models like the Music AI Sandbox are trained on highly creative works including visual art, music, and writing. When the underlying work is creative, that weighs against a finding of fair use.

The last factor here is the amount and substantiality of the portion used in relation to the copyrighted work as a whole. Using the entirety of a copyrighted work almost always weighs against a finding of fair use, especially when multiple works are copied. Training generative media AI on music means using copies of multiple copyrighted works to create quality training data.

OpenAI argues that what matters is not the amount of work copied, but the amount of copyrighted work ‘made available to the public.’ It admits that training on copyrighted material is “reasonably necessary” to create an accurate generative media AI, but it argues that this substantial copying should not matter if the copied material is not made available to the public.


Copyright for syndicated content belongs to the linked Source : DigitalMusicNews – https://www.digitalmusicnews.com/2024/05/15/google-deepmind-music-ai-sandbox/