The U.S. Food and Drug Administration (FDA) is preparing to evaluate the use of chatbot technology as a potential tool for mental health treatment, according to a recent report by Politico. This move signals a significant shift in how digital health innovations could be integrated into mental healthcare, as the agency explores regulatory frameworks for AI-powered therapeutic applications. The FDA’s consideration comes amid growing interest in leveraging artificial intelligence to address the rising demand for accessible and scalable mental health support.

FDA Evaluates Efficacy and Safety of AI-Powered Chatbot Therapy for Mental Health

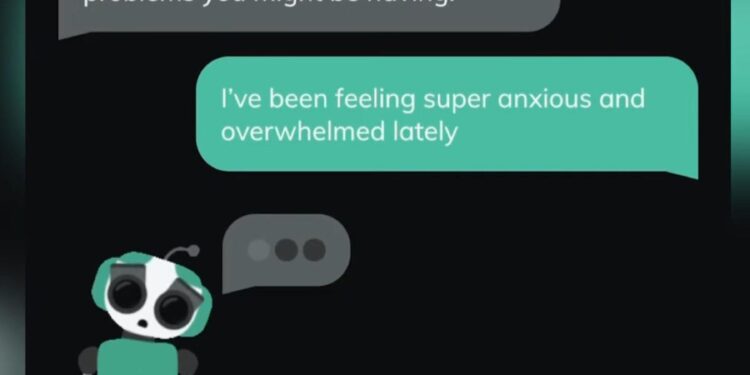

The FDA is preparing to review the effectiveness and safety of AI-powered chatbot therapy as a tool for addressing mental health concerns. The evaluation is part of a broader push to integrate digital solutions into healthcare, particularly in areas where access and stigma remain significant barriers. Early studies suggest that these chatbots could offer continuous, personalized support to people with anxiety, depression, and other mood disorders, potentially complementing traditional therapy sessions.

Key factors expected to come under FDA scrutiny include:

- Accuracy and reliability of diagnostic algorithms

- User data privacy and security measures

- Effectiveness in delivering cognitive-behavioral therapy (CBT)

- Potential risks of misdiagnosis or delayed professional treatment

| Evaluation Criteria | Expected Outcome |
|---|---|
| Therapeutic effectiveness | Reduction in symptom severity |
| User engagement | Consistent daily interaction |
| Safety protocols | Minimal adverse events |
| Privacy compliance | Full HIPAA adherence |

Potential Impact on Patient Access and Mental Health Care Delivery

FDA-approved chatbot therapy could substantially widen access to mental health services, particularly for underserved populations. By offering immediate, around-the-clock support, these digital tools could shorten wait times for traditional therapy and give an accessible alternative to people who are hesitant to seek in-person treatment. Lower cost and greater convenience could also reduce barriers for patients in rural or remote areas, where mental health professionals are often scarce. However, questions remain about whether these AI-driven tools will complement or disrupt existing models of care.

Mental health care delivery could change considerably if chatbots become part of the clinical landscape. Providers might use them to monitor patient progress and flag crisis situations, freeing clinicians to focus on complex cases. Yet challenges around patient data privacy, the accuracy of emotional assessments, and the risk of algorithmic bias call for careful regulation and transparency. Below is a concise overview of the potential benefits and concerns that stakeholders are weighing:

| Potential Benefits | Key Concerns |
|---|---|
| Expanded access in remote areas | Data security and confidentiality |
| Reduced wait times for therapy | Reliability of AI emotional interpretation |
| Cost-effective alternative to traditional care | Ensuring equitable treatment across demographics |
| Continuous patient engagement and monitoring | Integration with human-led healthcare teams |

Experts Call for Rigorous Oversight and Clear Regulatory Guidelines

Mental health professionals and technology experts stress the need for transparent, enforceable regulatory frameworks as the FDA evaluates chatbot therapy applications. They warn that without clearly defined guidelines, misdiagnosis, privacy breaches, and inadequate treatment protocols could undermine patient safety and public trust. Experts favor regulatory models that not only establish efficacy but also build in ongoing oversight, data security standards, and ethical safeguards tailored to AI-driven mental health tools.

Several key concerns have been raised regarding chatbot therapy’s integration into mainstream care:

- Accountability: Defining who is responsible for adverse outcomes when AI tools fail.

- Validation: Establishing uniform benchmarks for clinical effectiveness and accuracy.

- Patient Consent: Enhancing transparency about data usage and AI limitations.

- Bias Mitigation: Ensuring diverse and representative data sets to prevent discriminatory outcomes.

| Regulatory Focus | Potential Impact |
|---|---|
| Clinical Validation | Improves patient safety and treatment efficacy |
| Data Privacy Standards | Protects sensitive information and builds trust |
| Ethical Usage Guidelines | Prevents misuse and discrimination |
| Ongoing Oversight | Addresses emerging risks and technology updates |

Insights and Conclusions

As the FDA moves toward evaluating chatbot therapy for mental health, its eventual decision could change how digital tools are integrated into mental healthcare. Stakeholders across the healthcare sector will be watching closely, since regulatory approval may pave the way for broader acceptance and use of AI-driven treatments. The outcome could reshape patient care, access to treatment, and the future of mental health services.