In legal battles where scientific evidence plays a pivotal role, the line between correlation and causation often becomes blurred, sometimes with far-reaching consequences. Experts and analysts are increasingly calling for courts to sharpen their understanding of this fundamental distinction, emphasizing that conflating the two can lead to misguided verdicts and policies. The Genetic Literacy Project highlights this growing concern, urging the judicial system to adopt a more rigorous approach when evaluating scientific claims, particularly those involving genetics and public health. As science progresses and data grow more complex, the need for clearer standards in the courtroom has never been more pressing.

The Challenges of Interpreting Scientific Evidence in Legal Proceedings

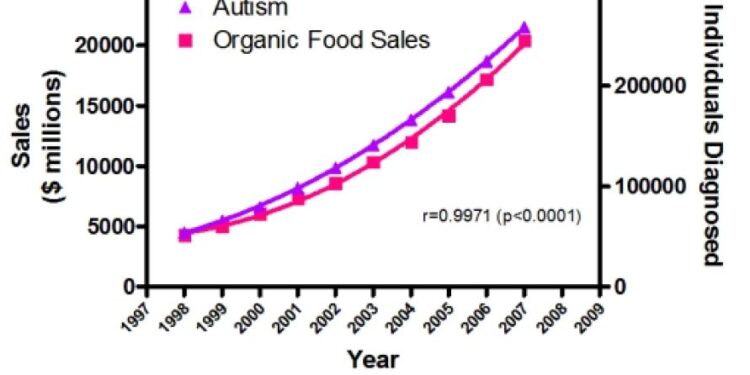

Courts often face the daunting task of evaluating scientific evidence that hinges on complex statistical relationships. A frequent pitfall is mistaking correlation for causation, which can lead to significant misinterpretations in legal judgments. Many studies presented in trials show associations, such as a genetic variant linked to a particular condition, but these associations don’t necessarily prove a direct cause-effect relationship. The distinction is critical: a correlation may indicate a potential link, but causation requires rigorous evidence that the factor in question directly influences the outcome and that the association is not explained by confounding variables or bias.

Moreover, the challenges are compounded by varying levels of scientific literacy among legal professionals and judges, who must make decisions based on intricate data. Factors that contribute to misunderstandings include:

- Lack of standardized criteria for evaluating scientific causality in courts

- The use of anecdotal evidence or isolated studies instead of comprehensive meta-analyses

- Treating statistical significance as if it established causation or practical importance

- Pressure to resolve cases quickly, leading to oversimplification of scientific nuances
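
The point about statistical significance can be made concrete with a little arithmetic. The sketch below is illustrative only: the effect size, standard deviation, and sample sizes are invented, and it assumes a simple two-sided z-test for a difference in means between two equal-sized groups. It shows that the same tiny effect can be "not significant" in a small study and overwhelmingly "significant" in a very large one, without becoming any larger or any more causal.

```python
import math

def two_sided_p_value(mean_difference, std_dev, n_per_group):
    """Two-sided p-value for a difference in means between two
    equal-sized groups, using a z-test with a known standard deviation."""
    standard_error = std_dev * math.sqrt(2.0 / n_per_group)
    z = mean_difference / standard_error
    # For a standard normal Z, P(|Z| > |z|) equals erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2.0))

# Hypothetical numbers: the same tiny effect (1% of a standard deviation)
# measured in a small study and in a very large one.
tiny_effect = 0.01
p_small_study = two_sided_p_value(tiny_effect, 1.0, 100)
p_huge_study = two_sided_p_value(tiny_effect, 1.0, 1_000_000)

print(f"n = 100 per group:       p = {p_small_study:.3f}")
print(f"n = 1,000,000 per group: p = {p_huge_study:.2e}")
```

Under these assumptions the first p-value is far above the conventional 0.05 threshold and the second is far below it, even though the underlying effect is identical. A small p-value says the difference is unlikely to be chance alone; it says nothing about whether the difference is large enough to matter or what caused it.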

| Evidence Type | Common Misinterpretation | Legal Implication |
|---|---|---|
| Correlation Study | Assumed direct cause | Unjust liability or dismissal |
| Case Reports | Overgeneralization | Faulty precedent |
| Meta-analysis | Dismissed as complex | Missed nuance in rulings |

Understanding the Critical Difference Between Correlation and Causation in Court

In legal proceedings, the distinction between correlation and causation is often blurred, leading to potentially flawed judgments. While correlation indicates a relationship between two variables, it does not prove that one causes the other. Courts frequently encounter expert testimony where statistical associations are presented as direct evidence of causality, yet without rigorous scientific validation, such claims risk oversimplifying complex data. This misunderstanding can impact verdicts in cases ranging from medical malpractice to environmental litigation, where establishing a clear cause-and-effect link is crucial.

To clarify this, experts suggest a checklist that judges and juries can use to evaluate evidence involving statistical data. The questions below echo several of the Bradford Hill considerations long used in epidemiology:

- Strength of Association: How strong is the observed relationship?

- Consistency: Has the relationship been observed repeatedly under different circumstances?

- Temporal Sequence: Did the alleged cause precede the effect?

- Biological Plausibility: Is there a scientifically plausible mechanism?

| Factor | Example | Relevance to Court Cases |
|---|---|---|
| Correlation | Ice cream sales and drowning rates both rise in summer | Risk of treating linked events as causal when a shared factor (summer weather) drives both |
| Causation | Smoking causes lung cancer, as established by many independent studies | A link backed by strong scientific evidence can support legal claims |
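
The ice cream and drowning example can be reproduced in a short simulation. The sketch below uses entirely invented numbers and assumes that temperature drives both series while neither affects the other. It compares the raw correlation with the correlation that remains after the linear effect of temperature is removed from each series (a partial correlation computed from regression residuals).

```python
import math
import random

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def residuals(ys, xs):
    """Residuals of ys after a least-squares straight-line fit on xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    return [(y - mean_y) - slope * (x - mean_x) for x, y in zip(xs, ys)]

random.seed(0)  # fixed seed so the run is repeatable

# Invented data: temperature drives both series; neither affects the other.
temperature = [random.uniform(5, 35) for _ in range(2000)]
ice_cream_sales = [20 * t + random.gauss(0, 60) for t in temperature]
drowning_index = [0.3 * t + random.gauss(0, 2) for t in temperature]

raw = pearson(ice_cream_sales, drowning_index)
adjusted = pearson(residuals(ice_cream_sales, temperature),
                   residuals(drowning_index, temperature))

print(f"raw correlation:                 {raw:.2f}")
print(f"after adjusting for temperature: {adjusted:.2f}")
```

In this toy setup the raw correlation is strong, yet it falls to roughly zero once temperature is accounted for. Real litigation data are rarely this clean: confounders may be unmeasured or nonlinear, which is why a study's handling of alternative explanations deserves scrutiny.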

By fostering a deeper understanding of these principles, the justice system can improve its ability to discern whether evidence truly supports claims of causality or merely reflects coincidental associations. This evolution is essential in cases heavily reliant on scientific data, ensuring fairer and more accurate outcomes.

Recommendations for Strengthening Scientific Literacy Among Judges and Attorneys

To enhance judicial and legal decision-making in cases involving scientific evidence, targeted efforts must be made to deepen the understanding of fundamental concepts such as correlation versus causation. This can be achieved through specialized training programs designed specifically for judges and attorneys that focus on the nuances of scientific methodology, statistical reasoning, and the interpretation of complex data. Emphasizing practical case studies where misguided assumptions about causality led to faulty verdicts can foster critical thinking and vigilance among legal professionals.

Moreover, collaboration with scientific experts should be institutionalized rather than ad hoc. Creating interdisciplinary advisory panels that include geneticists, statisticians, and epidemiologists can provide real-time guidance during trials where scientific claims are central. Courts could also benefit from clear criteria for evaluating expert testimony, including:

- Validation of scientific methods used in studies presented as evidence

- Assessment of bias and limitations in study design

- Recognition of alternative explanations for observed correlations

| Key Skill | Purpose | Impact |
|---|---|---|
| Basic Statistics | Understand significance vs. noise | Less misinterpretation of data |
| Scientific Method | Evaluate evidence reliability | More impartial rulings |
| Expert Collaboration | Clarify complex scientific claims | Improved accuracy in verdicts |

The Way Forward

As legal systems increasingly grapple with scientific evidence, the need to clearly differentiate correlation from causation has never been more critical. Misinterpretations can lead to flawed judgments, with significant consequences for justice and public policy. Bridging the gap between scientific nuance and legal standards will require ongoing dialogue, education, and collaboration. Only through a deeper understanding of how science works can courts ensure that decisions are informed, fair, and grounded in accurate interpretations of data.