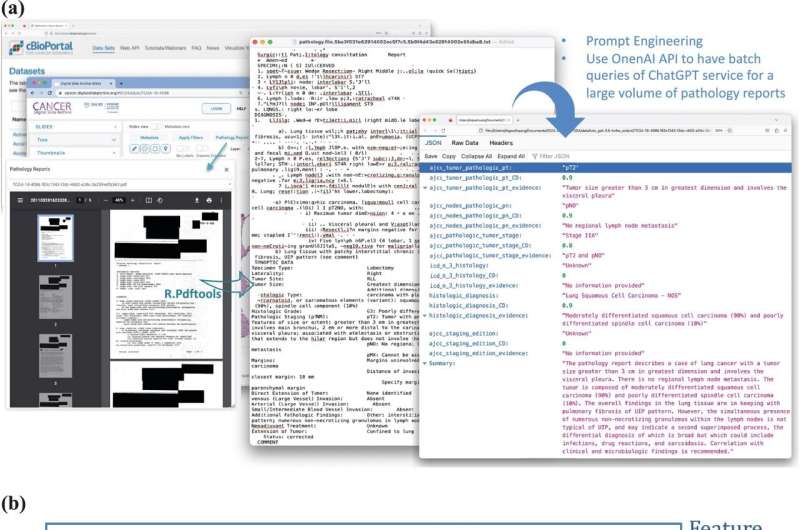

An overview of the process and framework of using ChatGPT for structured data extraction from pathology reports. (a) Illustration of the use of the OpenAI API for batch queries of the ChatGPT service, applied to a substantial volume of clinical notes (pathology reports in our study). (b) A general framework for integrating ChatGPT into real-world applications. Credit: npj Digital Medicine (2024). DOI: 10.1038/s41746-024-01079-8

ChatGPT, the artificial intelligence (AI) chatbot designed to assist with language-based tasks, can effectively extract data for research purposes from physicians’ clinical notes, UT Southwestern Medical Center researchers report in a new study.

Their findings, published in npj Digital Medicine, could significantly accelerate clinical research and lead to new innovations in computerized clinical decision-making aids.

“By transforming oceans of free-text health care data into structured knowledge, this work paves the way for leveraging artificial intelligence to derive insights, improve clinical decision-making, and ultimately enhance patient outcomes,” said study leader Yang Xie, Ph.D., Professor in the Peter O’Donnell Jr. School of Public Health and the Lyda Hill Department of Bioinformatics at UT Southwestern.

Dr. Xie is also Associate Dean of Data Sciences at UT Southwestern Medical School, Director of the Quantitative Biomedical Research Center, and a member of the Harold C. Simmons Comprehensive Cancer Center.

Much of the research in the Xie Lab focuses on developing and using data science and AI tools to improve biomedical research and health care. She and her colleagues wondered whether ChatGPT might speed the process of analyzing clinical notes—the memos physicians write to document patients’ visits, diagnoses, and statuses as part of their medical record—to find relevant data for clinical research and other uses.

Clinical notes are a treasure trove of information, Dr. Xie explained; however, because they are written in free text, extracting structured data typically involves having a trained medical professional read and annotate them. This process requires a huge investment of time and often resources—and can also introduce human bias.

Existing programs that use natural language processing require extensive human annotation and model training. As a result, clinical notes are largely underused for research purposes.

To determine whether ChatGPT could convert clinical notes to structured data, Dr. Xie and her colleagues had it analyze more than 700 sets of pathology notes for lung cancer patients to find the major features of primary tumors, whether lymph nodes were involved, and the cancer stage and subtype.

Overall, Dr. Xie said, ChatGPT's average accuracy in making these determinations was 89%, as judged against reviews by human readers.

The human readers' analysis took several weeks of full-time work, compared with the few days it took to fine-tune data extraction with the ChatGPT model. ChatGPT's accuracy was also significantly better than that of the traditional natural language processing methods tested for this use.

To test whether this approach is applicable to other diseases, Dr. Xie and her colleagues used ChatGPT to extract information about cancer grade and margin status from 191 clinical notes on patients from Children’s Health with osteosarcoma, the most common type of bone cancer in children and adolescents. Here, ChatGPT returned information with nearly 99% accuracy on grade and 100% accuracy on margin status.
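The accuracy figures reported here are per-field comparisons between what the model extracted and what human readers recorded. As a rough illustration of that arithmetic, and not the study's actual evaluation code, the Python sketch below scores a handful of invented records. The field names `grade` and `margin_status`, the function `field_accuracy`, and every value in the toy data are assumptions made up for this example.

```python
def field_accuracy(predicted, reference, fields):
    """Return the fraction of records where each field matches the human label."""
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    if not reference:
        raise ValueError("at least one record is required")
    accuracy = {}
    for field in fields:
        # Count records where the extracted value equals the human-reviewed value.
        hits = sum(p.get(field) == r.get(field) for p, r in zip(predicted, reference))
        accuracy[field] = hits / len(reference)
    return accuracy


# Invented toy data: four records, NOT taken from the study.
reference = [
    {"grade": "high", "margin_status": "negative"},
    {"grade": "high", "margin_status": "positive"},
    {"grade": "low", "margin_status": "negative"},
    {"grade": "high", "margin_status": "negative"},
]
predicted = [
    {"grade": "high", "margin_status": "negative"},
    {"grade": "low", "margin_status": "positive"},   # grade disagrees with the reviewer
    {"grade": "low", "margin_status": "negative"},
    {"grade": "high", "margin_status": "negative"},
]

scores = field_accuracy(predicted, reference, ["grade", "margin_status"])
average = sum(scores.values()) / len(scores)
print(scores, average)
```

On this toy data, grade matches in three of four records and margin status in all four, so the average across the two fields is 0.875. An "average accuracy" like the 89% quoted for the lung cancer reports would be computed the same way in this sketch, by averaging the per-field scores.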

Dr. Xie noted that the results were strongly influenced by the prompts ChatGPT was given for each task; the practice of crafting such prompts is known as prompt engineering. Providing multiple options to choose from, giving examples of appropriate responses, and directing ChatGPT to rely on evidence to draw conclusions improved its performance.
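The three prompt tactics mentioned above (offering a fixed set of options, showing an example response, and asking for supporting evidence) can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the prompts used in the study: the option list, the example report, the JSON reply format, and the helper names `build_prompt` and `parse_reply` are all hypothetical. The sketch makes no call to any model; it only builds the prompt text and checks a reply.

```python
import json

# Hypothetical option list, chosen only for illustration.
ALLOWED_SUBTYPES = ["adenocarcinoma", "squamous cell carcinoma", "other", "unknown"]

# One invented worked example showing the expected response format.
EXAMPLE_REPORT = "Right upper lobe, lobectomy: invasive adenocarcinoma, 2.1 cm."
EXAMPLE_ANSWER = {"subtype": "adenocarcinoma", "evidence": "invasive adenocarcinoma"}


def build_prompt(report_text: str) -> str:
    """Assemble a prompt with options, an example, and an evidence requirement."""
    options = ", ".join(f'"{s}"' for s in ALLOWED_SUBTYPES)
    return (
        "Extract the histologic subtype from the pathology report below.\n"
        f"Choose exactly one of: {options}.\n"
        "Quote the exact phrase from the report that supports your answer.\n"
        'Reply with JSON only, using the keys "subtype" and "evidence".\n\n'
        f"Example report: {EXAMPLE_REPORT}\n"
        f"Example answer: {json.dumps(EXAMPLE_ANSWER)}\n\n"
        f"Report: {report_text}\n"
        "Answer:"
    )


def parse_reply(reply: str, report_text: str) -> dict:
    """Accept a reply only if it picks an allowed option and quotes the report."""
    data = json.loads(reply)
    if not isinstance(data, dict):
        raise ValueError("reply is not a JSON object")
    if data.get("subtype") not in ALLOWED_SUBTYPES:
        raise ValueError(f"unexpected subtype: {data.get('subtype')!r}")
    evidence = data.get("evidence")
    if not isinstance(evidence, str) or not evidence:
        raise ValueError("missing evidence")
    # The quoted evidence must actually appear in the report text.
    if evidence.lower() not in report_text.lower():
        raise ValueError("evidence is not a quote from the report")
    return data
```

Constraining the answer to a closed list and requiring a verbatim quote also makes the reply easy to check automatically, which fits the call for continuous evaluation later in the article.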

She added that using ChatGPT or other large language models to extract structured data from clinical notes could not only speed clinical research but also help clinical trial enrollment by matching patients’ information to clinical trial protocols. However, she said, ChatGPT won’t replace the need for human physicians.

“Even though this technology is an extremely promising way to save time and effort, we should always use it with caution. Rigorous and continuous evaluation is very important,” Dr. Xie said.

More information:

Jingwei Huang et al, A critical assessment of using ChatGPT for extracting structured data from clinical notes, npj Digital Medicine (2024). DOI: 10.1038/s41746-024-01079-8

Citation:

Study shows ChatGPT can accurately analyze medical charts for clinical research, other applications (2024, May 13) retrieved 13 May 2024 from https://medicalxpress.com/news/2024-05-chatgpt-accurately-medical-clinical-applications.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.

Copyright for syndicated content belongs to the linked Source : Medical Xpress – https://medicalxpress.com/news/2024-05-chatgpt-accurately-medical-clinical-applications.html