The internet is filled with personal artifacts, many of which can linger online long after someone dies. But what if those relics are used to simulate dead loved ones? It’s already happening, and AI ethicists warn this reality is opening us up to a new kind of “digital haunting” by “deadbots.”

People have attempted to converse with deceased loved ones through religious rites, spiritual mediums, and even pseudoscientific technological approaches for millennia. But the ongoing interest in generative artificial intelligence presents an entirely new possibility for grieving friends and family—the potential to interact with chatbot avatars trained on a deceased individual’s online presence and data, including voice and visual likeness. While still advertised explicitly as digital approximations, some of the products offered by companies like Replika, HereAfter, and Persona can be (and in some cases already are) used to simulate the dead.

And while it may be difficult for some to process this new reality, or even take it seriously, it’s important to remember the “digital afterlife” industry isn’t just a niche market limited to smaller startups. Just last year, Amazon showed off the potential for its Alexa assistant to mimic a deceased loved one’s voice using only a short audio clip.

AI ethicists and science-fiction authors have explored and anticipated these potential situations for decades. But for researchers at Cambridge University’s Leverhulme Center for the Future of Intelligence, this unregulated, uncharted “ethical minefield” is already here. And to drive the point home, they envisioned three fictional scenarios that could easily occur any day now.

In a new study published in Philosophy and Technology, AI ethicists Tomasz Hollanek and Katarzyna Nowaczyk-Basińska relied on a strategy called “design fiction.” First coined by sci-fi author Bruce Sterling, design fiction refers to “a suspension of disbelief about change achieved through the use of diegetic prototypes.” Basically, researchers pen plausible events alongside fabricated visual aids.

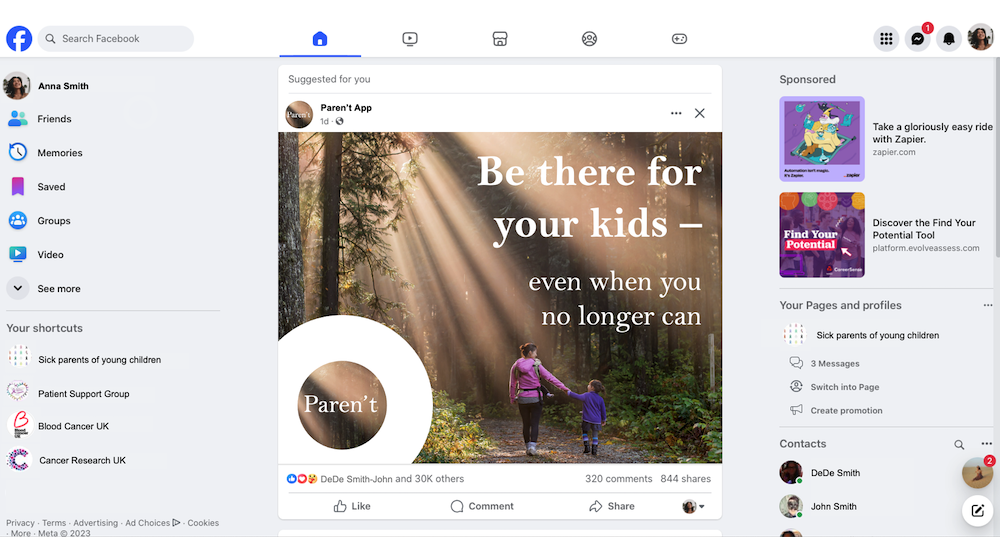

For their research, Hollanek and Nowaczyk-Basińska imagined three hyperreal scenarios of fictional individuals running into issues with various “postmortem presence” companies, and then made digital props like fake websites and phone screenshots. The researchers focused on three distinct demographics—data donors, data recipients, and service interactants. “Data donors” are the people upon whom an AI program is based, while “data recipients” are defined as the companies or entities that may possess the digital information. “Service interactants,” meanwhile, are the relatives, friends, and anyone else who may utilize a “deadbot” or “ghostbot.”

A fake Facebook ad for a fictional ‘ghostbot’ company. Credit: Tomasz Hollanek

In one piece of design fiction, an adult user is impressed by the realism of their deceased grandparent’s chatbot, only to soon receive “premium trial” and food delivery service advertisements in the style of their relative’s voice. In another, a terminally ill mother creates a deadbot for her eight-year-old son to help him grieve. But in adapting to the child’s responses, the AI begins to suggest in-person meetings, causing psychological harm.

In a final scenario, an elderly customer enrolls in a 20-year AI program subscription in the hopes of comforting their family. Because of the company’s terms of service, however, their children and grandchildren can’t suspend the service even if they don’t want to use it.

“Rapid advancements in generative AI mean that nearly anyone with internet access and some basic know-how can revive a deceased loved one,” said Nowaczyk-Basińska. “At the same time, a person may leave an AI simulation as a farewell gift for loved ones who are not prepared to process their grief in this manner. The rights of both data donors and those who interact with AI afterlife services should be equally safeguarded.”

“These services run the risk of causing huge distress to people if they are subjected to unwanted digital hauntings from alarmingly accurate AI recreations of those they have lost,” Hollanek added. “The potential psychological effect, particularly at an already difficult time, could be devastating.”

The ethicists believe certain safeguards can and should be implemented as soon as possible to prevent such outcomes. Companies need to develop sensitive procedures for “retiring” an avatar, as well as maintain transparency in how their services work through risk disclaimers. Meanwhile, “re-creation services” need to be restricted to adult users only, while also respecting the mutual consent of both data donors and their data recipients.

“We need to start thinking now about how we mitigate the social and psychological risks of digital immortality,” Nowaczyk-Basińska argues.

Copyright for syndicated content belongs to the linked Source : Popular Science – https://www.popsci.com/technology/digital-haunting-chatbots/