There are some signs to look out for when it comes to synthetic content. Image: Andrea De Santis/Unsplash

Generative AI is filling up the web at a rapid rate, and while we’re weighing up the consequences for education, energy, and human creativity, it’s making it much harder to trust anything that appears online. Is it real, or is it AI-generated?

For the moment, there’s no fully reliable way of detecting AI content, but there are some tell-tale signs of computer-generated text, audio, images, and videos that are worth looking out for. Add in a little human intelligence, and it’s usually possible to tell when there’s a good chance something is AI-generated.

Here we’re going to focus on AI-generated video, produced by tools such as OpenAI’s Sora, and we’ve included some examples as well. The next time you come across a video you’re not sure about, see how it stacks up against these criteria.

Bad text

You’ll notice text is missing from a lot of AI videos (and images). Generative AI doesn’t handle text well, because it doesn’t understand letters or language, at least not in the way humans do. Signs in AI videos often look like they’re written in an alien language, so look out for garbled text, or no text at all.

That’s not to say good text won’t appear in AI videos, but if it does, it’s probably been added in afterwards. In this Monster Camp trailer (embedded below) generated by Luma AI, some of the signage looks fine (and has most likely been added manually), but the lettering on the bus and the fair stalls is gibberish. You’ll need to look carefully, because the weird text isn’t visible for long.

Quick (or slow) cuts

That brings us to another hallmark of AI-generated video: You’ll often notice cuts are very short and the action moves very quickly. In part, this is to hide inconsistencies and inaccuracies in the videos you’re being shown—the idea is to give you the impression of something that’s real, rather than the real thing itself.

On the other hand—and to contradict what we just said—sometimes the action will be slowed right down. However, the end goal is the same: To stop the seams from showing at the edge of the AI’s capabilities.

In this AI-generated music video (embedded below) from Washed Out, it’s the former: Everything flashes past and is gone before you can take a proper look at it. Try pausing the video at different points to see how much weirdness you can spot (we noticed at least one person merging into a wall).

Bad physics

Generative AI knows how to mash together moving pixels to show something resembling a cat, or a city, or a castle. It does not, however, understand the world, 3D space, or the laws of physics. People will vanish behind buildings or look different between scenes, buildings will be constructed in weird ways, furniture won’t line up properly, and so on.

Consider this drone shot (embedded below) created by Sora from OpenAI. Keep your eyes on the gaggle of people in the lower left-hand corner, walking towards the bottom of the scene: They all merge into each other and finally blend into the railings, because the AI is seeing them as pixels rather than people.

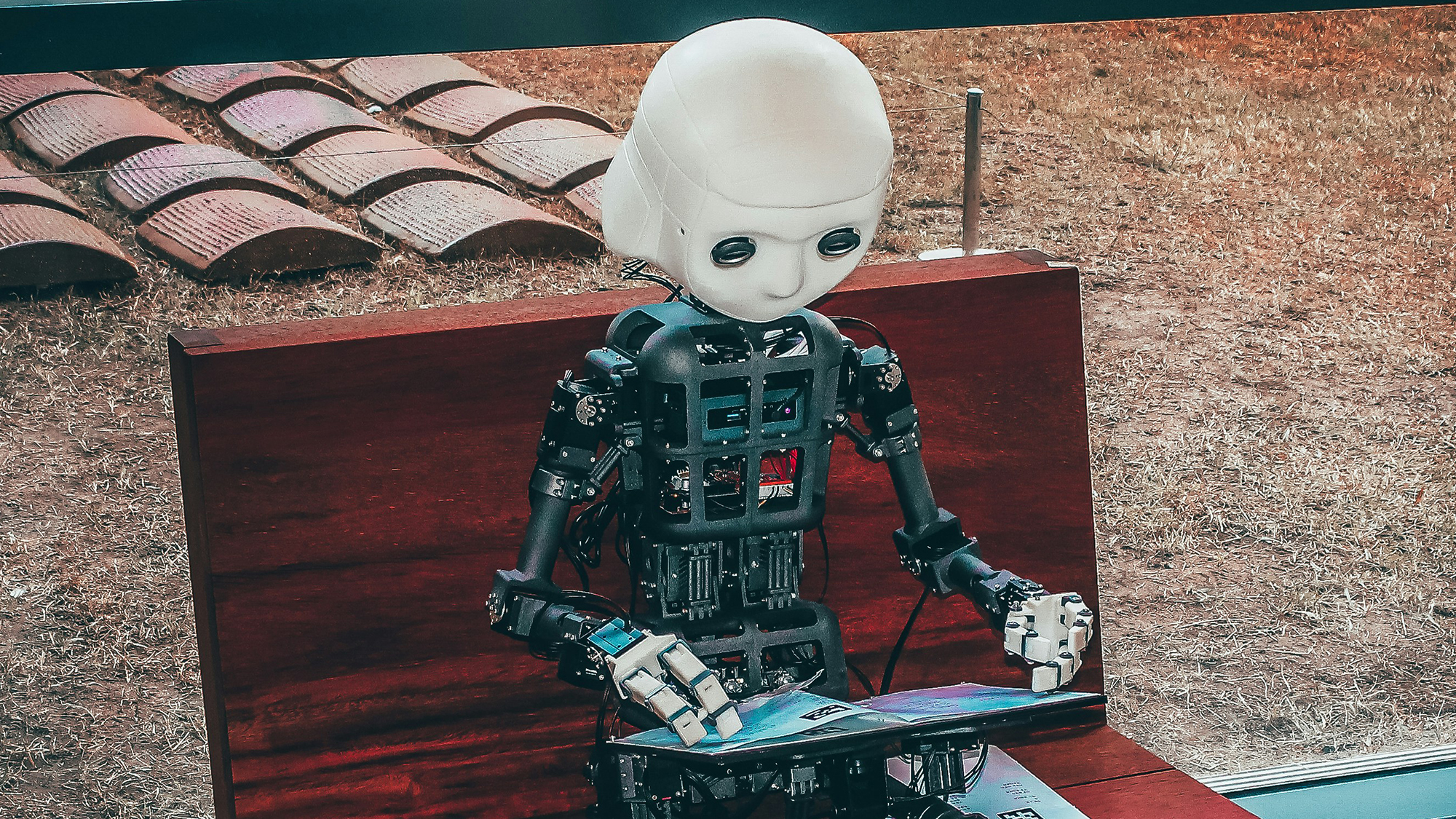

The uncanny valley

AI video often has a sheen that seems unnatural, and we’re already familiar with the phrase “uncanny valley” when it comes to computer-generated graphics that attempt to reproduce what’s real and natural. You’ll often be visiting the uncanny valley when watching AI videos, even if it’s just for brief moments.

If you have a look at the brand film made by Toys R Us with the help of OpenAI’s Sora, you’ll see the smile and the hair movements of the young boy look suspiciously unnatural. It also looks like a different kid from scene to scene, because he’s not an actor: He’s a generated 2D representation based on what the AI thinks a boy should probably look like.

Perfect (or imperfect) elements

This is another one where there’s something of a contradiction, because AI videos can be given away by elements that are too perfect or not perfect enough. These clips are generated by computers after all, so designs on buildings, vehicles, or materials might be repeated again and again, in patterns too flawless to exist in real life.

On the other hand, AI continues to struggle with anything natural, whether it’s a hand, a chin, or leaves blowing in the breeze. In this video from Runway AI of a running astronaut, you’ll notice the hands are a mess (and that a lot of the background physics is wrong and the text is smudged).

Check the context

Then there are all the tools we already had for identifying misinformation: Even before there was generative AI, there was Photoshop, so some of the rules for spotting fakes remain the same.

Context is key: If a video is coming from the official New York Times social media account, then it’s most likely trustworthy. If it’s being forwarded on by a faceless person on X with a lot of numbers in their username, perhaps less so.

Check to see if the action in the video has been captured from other angles, or has been widely reported elsewhere, or actually makes any sense. You can also check with the people being featured in the video: If there’s some Morgan Freeman narration in the background, Morgan Freeman will be able to verify it one way or the other.

Copyright for syndicated content belongs to the linked source: Popular Science – https://www.popsci.com/diy/how-to-spot-ai-generated-video/