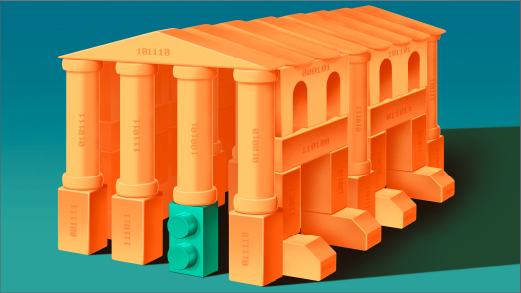

Theoretical computer scientists deal with complicated ideas. But whenever possible, they’d prefer to work with simpler ones. A 2009 tool known as the regularity lemma gives them a great way to do this. It effectively lets them break a given computational problem or function into simpler pieces.

For computational complexity theorists, who study the relative hardness of different problems, this ability to simplify has long helped them understand unwieldy mathematical functions. But certain problems with complex pieces have still defied analysis.

Now, new work provides a way to analyze those hard-to-understand problems. The advance comes from an unexpected area of computer science: algorithmic fairness, where algorithms like those used by banks and insurance companies are scrutinized to make sure they treat people fairly. The new results show that the fairness tools can effectively map out the different parts of a hard problem and isolate the precise regions of the problem that make it hard to solve.

“It’s really a fantastic work. I think it’s super exciting,” said Michael Kim, a computer scientist at Cornell University who helped build one of the fairness tools that was repurposed in the new work. “As a theorist who’s working in these spaces, it’s kind of an ideal outcome that somebody takes your work from one area and applies it to something else.”

Precision Forecasts

As institutions have become more comfortable using algorithms to decide who gets a bank loan, for example, or who should receive parole, it’s become more important to have a formal way of checking that human biases aren’t creeping into the calculations. But there’s more than one way to measure what’s fair.

Start with the general problem of measuring the accuracy of a prediction. Let’s say you come up with a computer program that predicts if it’s going to rain in your city, and you want to measure its accuracy. Let’s also say that it tends to rain on about 40% of the days in a year. If you use a fairness tool called multiaccuracy, your algorithm could be considered accurate if it makes an average prediction that is close to 40%. This could be achieved if the algorithm predicts a 40% chance of rain on every day of the year, or if it predicts 100% rainfall on just 40% of the days (since the average would be the same). Ask it to be more specific though — will it rain on Tuesday? — and the same algorithm may not be accurate.

Now consider an algorithm that predicts whether loan applicants are likely to make all their payments. It’s not good enough to have an algorithm that predicts the correct general rate — the 40% chance of rain in our example above. It needs to predict the rate for specific individuals across different population groups in a way that’s both accurate and fair.

Accurate predictions typically drop as you add layers of complexity, like a specific date for the weather forecast, or a specific person that’s applying for a loan. Real-life situations quickly become too complex for multiaccuracy to be the best way of measuring them.

In 2018, Kim and other fairness researchers came up with a new and stronger fairness paradigm called multicalibration, which accounts for these layers of complexities. This approach enforces “calibrated” predictions — basically, each layer of complication is accounted for in the system. Multicalibration means that an algorithm’s predictions are accurate whether you’re looking at all days, or just Tuesday. Or whether you’re making loan predictions about all people, or just certain types of people. Multicalibration should guarantee fairness across the board.

But its usefulness didn’t end there.

Beyond Fairness

Last year, a team of theoretical computer scientists saw a chance to bring these tools into a different field. They showed how multiaccuracy and multicalibration were equivalent to existing theorems in graph theory, a mathematical discipline that studies relationships between objects. It made Salil Vadhan, a computer scientist at Harvard University, wonder where else the tool might be useful.

“We saw they were getting the mileage [using] multicalibration in graph theory,” said Vadhan, one of the authors of the 2009 regularity lemma as well as the newest work. “Now let’s try to do it for complexity theory as well.” He teamed up with his Harvard colleague Cynthia Dwork, who was also one of the authors of the graph theory paper, and their undergraduate student Sílvia Casacuberta (who is now a graduate student at the University of Oxford).

>>> Read full article>>>

Copyright for syndicated content belongs to the linked Source : Quanta Magazine – https://www.quantamagazine.org/the-question-of-whats-fair-illuminates-the-question-of-whats-hard-20240624/