The 2024 Nobel Prize in Physics

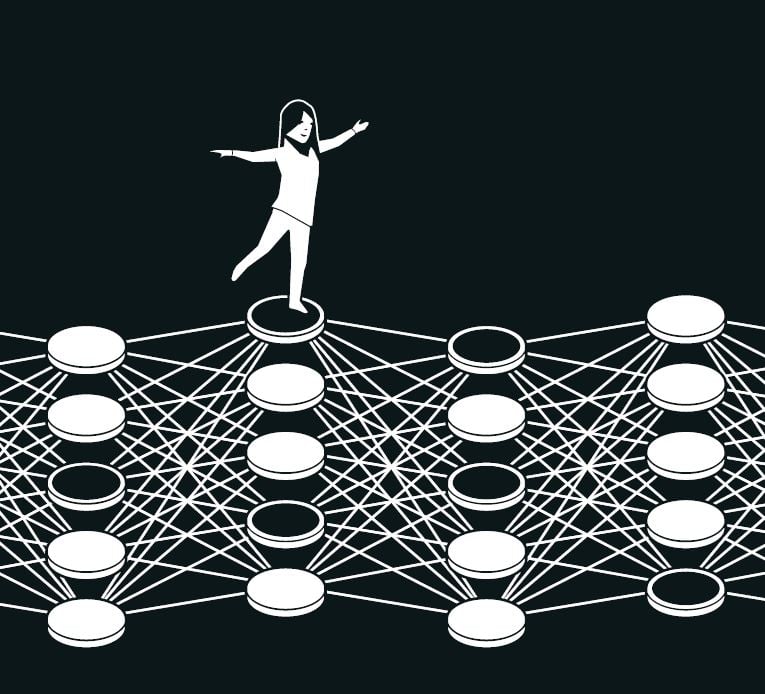

The physicists honored this year made significant strides in formulating methods that have paved the way for cutting-edge advancements in machine learning. John Hopfield devised a framework capable of storing and reconstructing data, while Geoffrey Hinton developed an approach that autonomously uncovers patterns within datasets, a cornerstone for contemporary large-scale artificial neural networks.

Harnessing Physics to Reveal Data Patterns

© Johan Jarnestad/The Royal Swedish Academy of Sciences

Many people have seen computers translate between languages, recognize images, and even hold reasonable conversations. What is perhaps less well known is that this type of technology has also been critical in research, particularly for sorting and analyzing vast amounts of data. Machine learning has expanded rapidly over the last fifteen to twenty years and relies on a structure called an artificial neural network. Today, when people refer to artificial intelligence, this is often the technology they mean.

Although computers cannot think as humans do, machines can now mimic functions such as memory and learning. This year's laureates helped make this possible by using fundamental concepts and methods from physics to develop technologies that use network structures to process information.

Conventional software works like a carefully followed recipe: ingredients (data) are processed according to explicit instructions to produce a predefined result. Machine learning instead lets computers learn from examples, which equips them to tackle problems too vague or complex for step-by-step instructions. One example is interpreting an image to identify the objects in it.

Emulating Neural Functionality

An artificial neural network processes information using its entire network structure, a design originally inspired by the wish to understand how the brain works. As early as the 1940s, mathematicians explored the concepts underlying the brain's network of neurons and synapses. Another insight came from psychology, through Donald Hebb's hypothesis that connections between neurons are strengthened when the neurons repeatedly act together.

In the following decades, researchers tried to recreate the behavior of neurons by building artificial networks as computer simulations. In these, nodes stand in for neurons and are assigned different values, while the connections between nodes can be made stronger or weaker. Hebb's principle still serves as one of the basic rules for updating artificial networks during training.


Enthusiasm for neural networks waned toward the end of the 1960s, when discouraging theoretical results led many researchers to doubt that the networks would ever be of practical use. Interest revived in the 1980s, spurred by several important contributions, including the work recognized by this year's prize.

Understanding Associative Memory

Imagine trying to recall a fairly unusual word that you rarely use, such as the one for the sloping floor often found in theaters and lecture halls. You search your memory. It is something like “ramp,” perhaps “rad...”? No, not that. Then it comes to you: “rake!”

This process of searching through similar words to find the right one resembles the associative memory that John Hopfield described in 1982. The Hopfield network can store patterns and has a method for recreating them: when the network is given an incomplete or slightly distorted pattern, it finds the stored pattern that is most similar.
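The storage and recall behavior described above can be sketched in a few lines of Python. This is a minimal illustration, not Hopfield's original formulation: it assumes nodes that take the values +1 or -1, weights set with a Hebbian rule, and an energy function that decreases as the network settles. The function names `train`, `energy`, and `recall` and the two example patterns are hypothetical.

```python
# Minimal Hopfield-style network sketch.
# Assumptions (not from the article): +1/-1 nodes, Hebbian weights.


def train(patterns):
    """Build a symmetric weight matrix from the patterns (Hebbian rule)."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j] / n
    return weights


def energy(weights, state):
    """Energy of a state; stored patterns should sit at low energy."""
    n = len(state)
    return -0.5 * sum(weights[i][j] * state[i] * state[j]
                      for i in range(n) for j in range(n))


def recall(weights, state, max_sweeps=10):
    """Update nodes one at a time until no node changes its value."""
    state = list(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(state)):
            field = sum(w * s for w, s in zip(weights[i], state))
            new_value = 1 if field >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state


# Two hypothetical stored patterns of eight nodes each.
stored = [
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
]
weights = train(stored)

noisy = list(stored[0])
noisy[0] = -noisy[0]  # distort one node of the first pattern
restored = recall(weights, noisy)
```

Starting from the distorted input, `recall` flips nodes until the state stops changing, which in this small example returns the first stored pattern.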

Hopfield had previously used his background in physics to explore problems in molecular biology. After attending seminars on neuroscience, where he encountered research into the structure of the brain, he became curious about the dynamics of simple neural networks. When the elements of such a network act together, they can give rise to new characteristics that are not apparent in the separate components.

Advancements in Hopfield Networks

Hopfield and other researchers have continued to develop the Hopfield network, including versions whose nodes can store any value rather than only two states. If the nodes are pictured as pixels in an image, they can take on different colors instead of only black or white. Improved methods have made it possible to store more images and to tell them apart even when they are quite similar. It is equally possible to identify or reconstruct any kind of information, provided it is built from many data points.

Understanding Image Recognition through Historical Physics Concepts

The process of remembering an image is straightforward; however, understanding its significance adds complexity.

Consider how very young children can identify various animals in their surroundings—confidently naming them as dogs or cats despite occasional errors. With just a few encounters with each animal type, they quickly categorize them accurately without formal lessons on taxonomy or biology. This ability stems from their interaction with the environment and repeated exposure to different stimuli.

When Hopfield published his work on associative memory, Geoffrey Hinton was working at Carnegie Mellon University in Pittsburgh, having previously studied experimental psychology and artificial intelligence in England and Scotland. He was exploring whether machines could learn to process patterns the way humans do, finding their own categories for sorting data. Together with Terrence Sejnowski, Hinton started from the Hopfield network and expanded it into something new, using ideas from statistical physics.

The Role of Statistical Physics

Statistical physics describes systems composed of many similar elements, such as the molecules in a gas. Tracking every individual molecule is difficult or impossible, but the molecules can be considered collectively to determine the gas's overall properties, such as pressure and temperature. There are many ways for the molecules to spread through the gas at individual speeds and still produce the same collective properties.

Statistical physics can be used to analyze the states in which the individual components can jointly exist and to calculate the probability of each state occurring. Some states are more probable than others, depending on the available energy, as described by an equation from the nineteenth-century physicist Ludwig Boltzmann. Hinton's network made use of that equation, and the method was published in 1985 under the striking name “Boltzmann machine.”
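To make the link between energy and probability concrete, here is a small Python sketch of the Boltzmann distribution, in which the probability of a state is proportional to exp(-E / T). The article does not give the equation in this form, so treat the details as assumptions: the Boltzmann constant is folded into the temperature, and the function name `boltzmann_probabilities` and the example energies are hypothetical.

```python
import math


def boltzmann_probabilities(energies, temperature=1.0):
    """Return P(state) proportional to exp(-E / T) for each state energy."""
    factors = [math.exp(-e / temperature) for e in energies]
    total = sum(factors)  # normalizing constant (the partition function)
    return [f / total for f in factors]


# Hypothetical energies for three states; lower energy means more probable.
energies = [0.0, 1.0, 2.0]
probs = boltzmann_probabilities(energies)

# A higher temperature flattens the distribution toward uniform.
hot_probs = boltzmann_probabilities(energies, temperature=10.0)
```

Lower-energy states receive higher probability, and raising the temperature makes the states more nearly equally likely.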

Learning New Patterns with Boltzmann Machines

A Boltzmann machine typically uses two types of nodes. Information is fed to the visible nodes, while the hidden nodes form a separate group whose values and connections also contribute to the energy of the network as a whole.

The machine is run by applying a rule that updates the values of the nodes one at a time. Eventually it reaches a state in which the pattern of the nodes can still change but the properties of the network as a whole stay the same. Each possible pattern then has a specific probability, determined by the network's energy according to Boltzmann's equation. When the machine stops, it has produced a new pattern, which makes the Boltzmann machine an early example of a generative model.
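The one-node-at-a-time update can be sketched as Gibbs sampling on a very small network. This is an illustrative sketch under stated assumptions, not the published algorithm: nodes take the values 0 or 1, the temperature is fixed at 1, and the weights, biases, and function names below are hypothetical. Because the network has only three nodes, the exact Boltzmann probabilities can be computed by enumeration and compared with how often each pattern appears during sampling.

```python
import itertools
import math
import random

# Hypothetical tiny machine: nodes 0 and 1 are visible, node 2 is hidden.
WEIGHTS = [
    [0.0, 0.5, 1.0],
    [0.5, 0.0, -1.0],
    [1.0, -1.0, 0.0],
]
BIASES = [0.0, 0.2, -0.3]


def energy(state):
    """Energy of a joint state of all nodes (visible and hidden)."""
    n = len(state)
    pair_term = sum(WEIGHTS[i][j] * state[i] * state[j]
                    for i in range(n) for j in range(i + 1, n))
    bias_term = sum(b * s for b, s in zip(BIASES, state))
    return -(pair_term + bias_term)


def exact_distribution():
    """Boltzmann probabilities by brute-force enumeration (tiny nets only)."""
    states = list(itertools.product([0, 1], repeat=len(BIASES)))
    factors = [math.exp(-energy(s)) for s in states]
    total = sum(factors)
    return {s: f / total for s, f in zip(states, factors)}


def gibbs_sample(sweeps, burn_in=500, seed=0):
    """Update nodes one at a time and record how often each pattern occurs."""
    rng = random.Random(seed)
    state = [0] * len(BIASES)
    counts = {}
    for sweep in range(sweeps + burn_in):
        for i in range(len(state)):
            net_input = BIASES[i] + sum(
                WEIGHTS[i][j] * state[j]
                for j in range(len(state)) if j != i)
            p_on = 1.0 / (1.0 + math.exp(-net_input))
            state[i] = 1 if rng.random() < p_on else 0
        if sweep >= burn_in:  # discard early sweeps before equilibrium
            key = tuple(state)
            counts[key] = counts.get(key, 0) + 1
    return {s: c / sweeps for s, c in counts.items()}


exact = exact_distribution()
sampled = gibbs_sample(sweeps=20000)
```

After the burn-in period the individual patterns keep changing, but their observed frequencies should settle close to the exact probabilities, which is the equilibrium behavior the text describes.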


The Boltzmann machine learns not from programmed instructions but from examples. During training, example patterns are fed to the visible nodes, and the network is adjusted so that those patterns are as likely as possible to appear when the machine is run. The more often a pattern is repeated during training, the higher its probability becomes. A trained machine can also recognize familiar traits in information it has not seen before, much as you might meet a friend's relative for the first time and immediately see the family resemblance.
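The idea of learning from examples can be illustrated with a deliberately tiny machine. The sketch below makes several assumptions that the article does not state: it uses the standard learning rule of nudging each connection by the difference between how often two nodes are on together in the examples and in the model, it has three visible nodes and no hidden nodes, and it computes the model's probabilities exactly by enumeration rather than by sampling. All names and the example patterns are hypothetical.

```python
import itertools
import math

N = 3  # hypothetical machine: three visible nodes, no hidden nodes
STATES = list(itertools.product([0, 1], repeat=N))
PAIRS = [(i, j) for i in range(N) for j in range(i + 1, N)]


def distribution(weights, biases):
    """Exact Boltzmann distribution over all states (tiny nets only)."""
    factors = []
    for s in STATES:
        neg_energy = sum(weights[(i, j)] * s[i] * s[j] for (i, j) in PAIRS)
        neg_energy += sum(b * v for b, v in zip(biases, s))
        factors.append(math.exp(neg_energy))
    total = sum(factors)
    return {s: f / total for s, f in zip(STATES, factors)}


def train(examples, steps=200, rate=0.5):
    """Raise the probability of the examples by matching their statistics."""
    weights = {p: 0.0 for p in PAIRS}
    biases = [0.0] * N
    for _ in range(steps):
        model = distribution(weights, biases)
        for (i, j) in PAIRS:
            data_corr = sum(e[i] * e[j] for e in examples) / len(examples)
            model_corr = sum(prob * s[i] * s[j] for s, prob in model.items())
            weights[(i, j)] += rate * (data_corr - model_corr)
        for i in range(N):
            data_mean = sum(e[i] for e in examples) / len(examples)
            model_mean = sum(prob * s[i] for s, prob in model.items())
            biases[i] += rate * (data_mean - model_mean)
    return weights, biases


# The first pattern appears twice, the second once.
examples = [(1, 1, 0), (1, 1, 0), (0, 0, 1)]
before = distribution({p: 0.0 for p in PAIRS}, [0.0] * N)
weights, biases = train(examples)
after = distribution(weights, biases)
```

Before training every pattern is equally likely; afterwards the repeated pattern `(1, 1, 0)` should be the most probable, in line with the description above.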

Evolving Beyond Initial Limitations

In its original form, the Boltzmann machine is fairly inefficient and takes a long time to find solutions. Later extensions, which Hinton continued to explore, have streamlined its operation. In particular, removing some of the connections between units has been found to make the machine more efficient. Interest in the field revived after the 1990s, helped in part by the continued work of researchers such as Geoffrey Hinton.


Notable Contributors to Machine Learning: A Brief Overview

John Hopfield

Born in 1933 in Chicago, Illinois, John Hopfield earned his doctorate from Cornell University in 1958. He currently holds a professorship at Princeton University in New Jersey, USA.

Geoffrey E. Hinton

Geoffrey Hinton was born in London, United Kingdom, in 1947. He completed his doctoral studies at The University of Edinburgh in 1978 and is presently a professor at the University of Toronto, Canada.

Acknowledgment for Pioneering Work

The pair received the prize “for foundational discoveries and inventions that enable machine learning with artificial neural networks.” Their contributions have significantly shaped the field of artificial intelligence.

Text Contributor: Anna Davour

Translation Services: Clare Barnes

Illustrations by: Johan Jarnestad

Editorship by: Sara Gustavsson

© The Royal Swedish Academy of Sciences

Citation Reference

You can cite this section as follows:

MLA style: “Popular information.” NobelPrize.org. Nobel Prize Outreach AB 2024. Accessed 10 Oct 2024.