
Forward-looking: We hear plenty of legitimate concerns regarding the new wave of generative AI, from the human jobs it could replace to its potential for creating misinformation. But one area that often gets overlooked is the sheer amount of energy these systems use. In the not-so-distant future, the technology could be consuming the same amount of electricity as an entire country.

Alex de Vries, a researcher at the Vrije Universiteit Amsterdam, authored ‘The Growing Energy Footprint of Artificial Intelligence,’ which examines the environmental impact of AI systems.

De Vries notes that the training phase for large language models is often considered the most energy-intensive, and therefore has been the focus of sustainability research in AI.

Following training, models are deployed into a production environment and begin the inference phase. In the case of ChatGPT, this involves generating live responses to user queries. Little research has gone into the inference phase, but De Vries believes there are indications that this period might contribute significantly to an AI model’s life-cycle costs.

According to research firm SemiAnalysis, OpenAI required 3,617 Nvidia HGX A100 servers, with a total of 28,936 GPUs, to support ChatGPT, implying an energy demand of 564 MWh per day. For comparison, an estimated 1,287 MWh was used in GPT-3’s entire training phase, so at that rate inference would overtake the training total in under three days.
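The daily figure can be reproduced from the server count, assuming each HGX A100 server draws the 6.5 kW quoted for the Google scenario and runs at full load around the clock. A minimal Python sketch (function and variable names are illustrative, not taken from the paper):

```python
# Figures quoted in the article.
CHATGPT_SERVERS = 3_617
GPUS_PER_SERVER = 8            # 28,936 GPUs / 3,617 servers
GPT3_TRAINING_MWH = 1_287

# Assumption: 6.5 kW per HGX A100 server at constant full load.
KW_PER_SERVER = 6.5


def daily_energy_mwh(servers: int, kw_per_server: float) -> float:
    """Energy used over 24 hours at constant load, in MWh."""
    return servers * kw_per_server * 24 / 1_000


chatgpt_daily_mwh = daily_energy_mwh(CHATGPT_SERVERS, KW_PER_SERVER)
days_to_match_training = GPT3_TRAINING_MWH / chatgpt_daily_mwh

print(f"GPUs: {CHATGPT_SERVERS * GPUS_PER_SERVER:,}")
print(f"Inference energy per day: {chatgpt_daily_mwh:.0f} MWh")
print(f"Days of inference to match GPT-3 training: {days_to_match_training:.1f}")
```

Under those assumptions, the result lands on the 564 MWh per day cited above; real-world consumption would be lower whenever the servers are not fully utilized.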

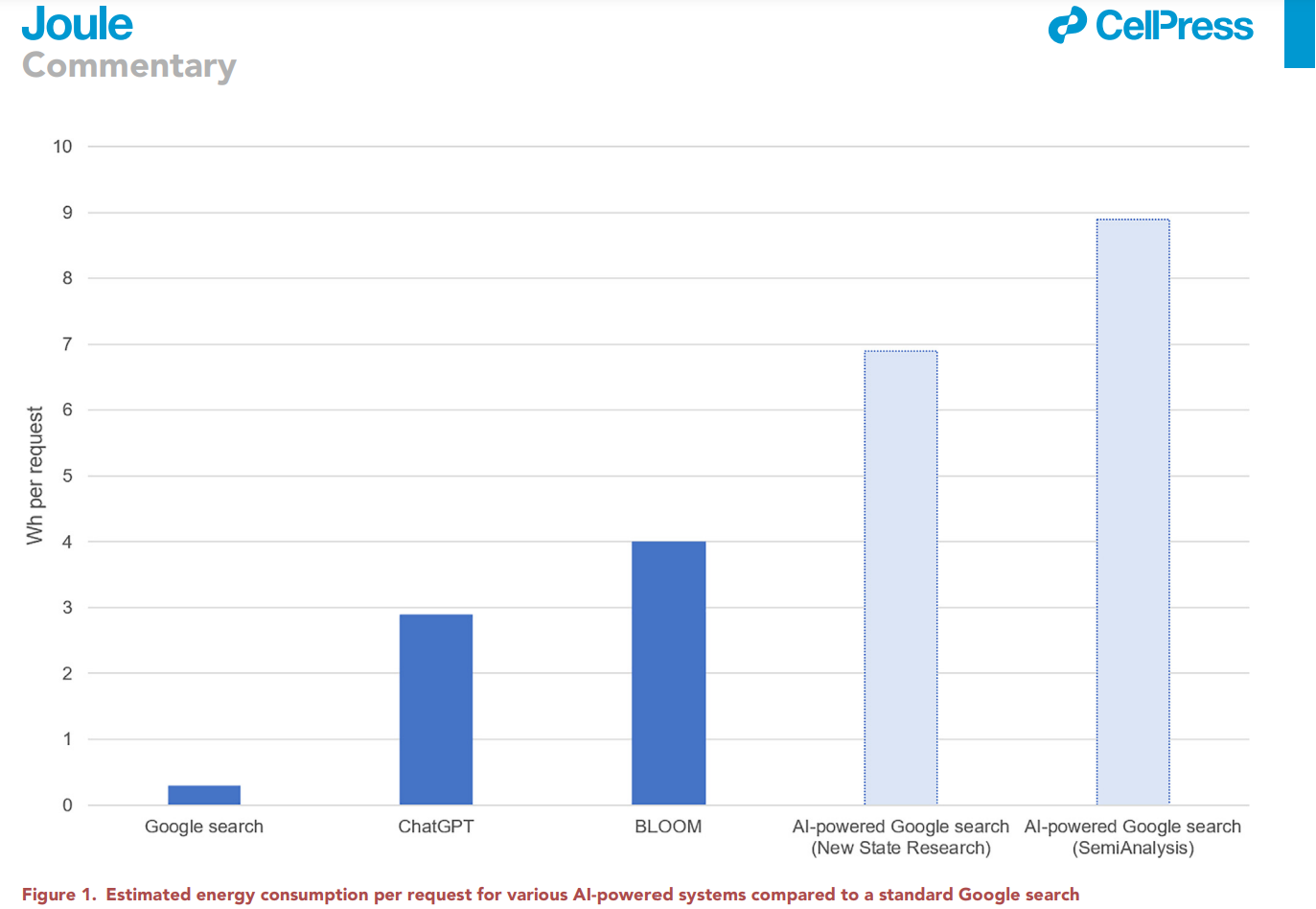

Google, which reported that 60% of AI-related energy consumption from 2019 to 2021 stemmed from inference, is integrating AI features into its search engine. Back in February, Alphabet Chairman John Hennessy said that a single user exchange with an AI-powered search service “likely costs ten times more than a standard keyword search.”

SemiAnalysis estimates that implementing an AI similar to ChatGPT into every single Google search would require 512,821 A100 HGX servers, totaling 4,102,568 GPUs. At a power demand of 6.5 kW per server, this would translate into a daily electricity consumption of 80 GWh and an annual consumption of 29.2 TWh, about the same amount used by Ireland every year.
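The Ireland comparison follows from simple arithmetic on the quoted figures. The sketch below assumes all servers run continuously at 6.5 kW for 365 days; the names are illustrative.

```python
# Figures quoted in the article for the "AI in every Google search" scenario.
SEARCH_SERVERS = 512_821
SEARCH_GPUS_PER_SERVER = 8
SEARCH_KW_PER_SERVER = 6.5     # power demand per server, as quoted

# Assumption: constant full load, 24 hours a day, 365 days a year.
search_power_mw = SEARCH_SERVERS * SEARCH_KW_PER_SERVER / 1_000
search_daily_gwh = search_power_mw * 24 / 1_000
search_annual_twh = search_daily_gwh * 365 / 1_000

print(f"GPUs: {SEARCH_SERVERS * SEARCH_GPUS_PER_SERVER:,}")
print(f"Continuous power demand: {search_power_mw:,.0f} MW")
print(f"Daily consumption: {search_daily_gwh:.0f} GWh")
print(f"Annual consumption: {search_annual_twh:.1f} TWh")
```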

Such a scenario is unlikely to happen anytime soon, not least because Nvidia doesn’t have the production capacity to deliver over half a million HGX servers and it would cost Google $100 billion to buy them.

Away from the hypothetical, the paper notes that market leader Nvidia is expected to deliver 100,000 of its AI servers in 2023. If operating at full capacity, these servers would have a combined power demand of 650 to 1,020 MW and could consume 5.7 to 8.9 TWh of electricity annually. That’s an almost negligible amount compared to the annual consumption of data centers (205 TWh). But by 2027, Nvidia could be shipping 1.5 million AI server units, pushing their annual energy consumption up to 85.4 to 134.0 TWh. That’s around the same amount as a country such as Argentina, the Netherlands, or Sweden.
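The ranges above can be recovered from the shipment numbers. The per-server draw of 6.5 kW to 10.2 kW used here is inferred by dividing the quoted 650 to 1,020 MW by 100,000 servers, and the sketch assumes every server runs at full capacity for all 8,760 hours of the year; the names are illustrative.

```python
HOURS_PER_YEAR = 24 * 365            # 8,760 hours
DATA_CENTER_TWH = 205                # annual data center consumption, as quoted

# Inferred from the article: 650 MW and 1,020 MW spread over 100,000 servers.
KW_PER_SERVER_LOW = 6.5
KW_PER_SERVER_HIGH = 10.2


def fleet_annual_twh(servers: int, kw_per_server: float) -> float:
    """Annual energy of a server fleet at constant full load, in TWh."""
    kwh = servers * kw_per_server * HOURS_PER_YEAR
    return kwh / 1e9                 # 1 TWh = 1e9 kWh


for year, servers in ((2023, 100_000), (2027, 1_500_000)):
    low = fleet_annual_twh(servers, KW_PER_SERVER_LOW)
    high = fleet_annual_twh(servers, KW_PER_SERVER_HIGH)
    share_low = 100 * low / DATA_CENTER_TWH
    share_high = 100 * high / DATA_CENTER_TWH
    print(f"{year}: {low:.1f} to {high:.1f} TWh per year "
          f"({share_low:.0f}% to {share_high:.0f}% of {DATA_CENTER_TWH} TWh)")
```

Expressing each range as a share of the 205 TWh data center figure shows why the 2023 fleet reads as negligible while the 2027 projection does not.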

“It would be advisable for developers not only to focus on optimizing AI, but also to critically consider the necessity of using AI in the first place, as it is unlikely that all applications will benefit from AI or that the benefits will always outweigh the costs,” said De Vries.


Copyright for syndicated content belongs to the linked source: TechSpot – https://www.techspot.com/news/100464-ai-energy-demands-could-soon-match-entire-electricity.html