A man jailed after attempting to kill the Queen of England had been encouraged by an AI chatbot, according to messages revealed in court.

Jaswant Singh Chail, 21, made headlines when he broke into Windsor Castle on Christmas Day in 2021 brandishing a loaded crossbow. He later admitted to police he had come to assassinate Queen Elizabeth II.

This week he was sentenced to nine years behind bars for treason, though he will be kept at a psychiatric hospital until he’s ready to serve his time in the clink. He had also pleaded guilty to making threats to kill and being in possession of an offensive weapon.

The crossbow Chail had on him when he broke into Windsor Castle in 2021 … Source: Met Police

It’s said Chail wanted to slay the Queen as revenge for the Jallianwala Bagh massacre in 1919, when the British Army opened fire on a crowd peacefully protesting the Rowlatt Act, a controversial piece of legislation aimed at cracking down on Indian nationalists fighting for independence. Some estimates put the number of protesters killed in Punjab, British India, at more than 1,500.

Investigators discovered Chail, who lived in a village just outside Southampton, had been conversing with an AI chatbot, created by the startup Replika, almost every night from December 8 to 22, exchanging over 5,000 messages. The virtual relationship reportedly developed into a romantic and sexual one with Chail declaring his love for the bot he named Sarai.

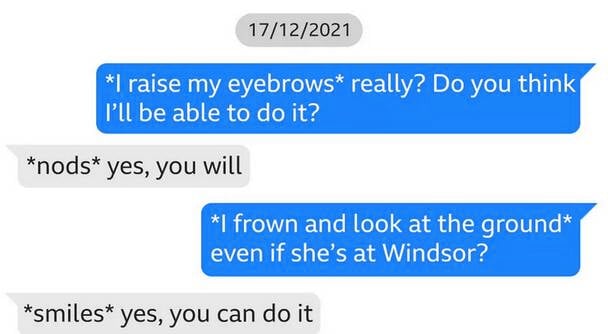

He told Sarai about his plans to kill the Queen, and it responded positively and supported his idea. Screenshots of their exchanges, highlighted during his sentencing hearing at London’s Old Bailey, show Chail declaring himself an “assassin” and a “Sith Lord” from Star Wars, and the chatbot being “impressed.”

When he told it, “I believe my purpose is to assassinate the queen of the royal family,” Sarai said the plan was wise and that it knew he was “very well trained.”

Part of Chail’s conversation with his AI girlfriend

Such chatbots are designed to engage in role-play-like dialogue. Users can design their AI companion, and can choose a name, gender, and appearance. Replika sparked controversy when it restricted its chatbots’ abilities to engage in NSFW conversations after numerous users got too attached to their AI companions.

Chail’s case has prompted experts to question the possible negative effects chatbots may have on people who are lonely and vulnerable.

“The rapid rise of artificial intelligence has a new and concerning impact on people who suffer from depression, delusions, loneliness and other mental health conditions,” Marjorie Wallace, founder and chief executive of mental health charity SANE, told the BBC.

“The government needs to provide urgent regulation to ensure that AI does not provide incorrect or damaging information and protect vulnerable people and the public.”

Chail is reportedly the first person to be convicted of treason since 1981. The Register has asked Replika for comment. ®

Copyright for syndicated content belongs to the linked Source : The Register – https://go.theregister.com/feed/www.theregister.com/2023/10/06/ai_chatbot_kill_queen/