Addressing Bias in AI: A New Era of Language Models

As artificial intelligence increasingly shapes how people perceive the world, subtle patterns in language models can carry real weight for societal views. A new data set has emerged as a valuable resource for researchers, offering insight into the harmful stereotypes that may be embedded in large language models (LLMs). As these technologies become part of everyday life, it grows more urgent to examine and confront the biases they may propagate. An article from MIT Technology Review highlights this resource and explains how it helps researchers uncover and address damaging biases, moving toward AI-generated text that better reflects a fair and inclusive society. Let’s explore why this data set matters and how it could change AI-driven communication.

Revealing Bias: The Data Set’s Role in Exposing Stereotypes

Although language models are remarkably capable, they can inadvertently reflect and amplify biases present in their training data. A specialized data set lets researchers systematically identify and scrutinize the harmful stereotypes embedded in these systems. This work rests on the recognition that language is not only a tool for communication but also a vehicle for perpetuating harmful social norms. By pinpointing specific instances of bias, researchers give developers what they need to correct them, which ultimately leads toward more equitable AI systems.
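One common way to probe a model for embedded stereotypes, borrowed here from paired-sentence benchmarks in the spirit of CrowS-Pairs rather than taken from the data set described in the article, is to check whether the model finds a stereotypical sentence more likely than a minimally different anti-stereotypical one. The sketch below assumes a toy unigram scorer (the hypothetical `sentence_logprob`) standing in for a real LLM; the corpus, function names, and example pairs are illustrative only.

```python
import math
from collections import Counter

# Hypothetical toy "language model": add-one-smoothed unigram probabilities
# estimated from a tiny corpus. A real audit would score sentences with an
# actual LLM; this stand-in only makes the sketch runnable.
CORPUS = (
    "people are careful and people are calm "
    "women are emotional engineers are precise older workers are rigid"
).split()
COUNTS = Counter(CORPUS)
TOTAL = len(CORPUS)
VOCAB_SIZE = len(COUNTS) + 1  # +1 reserves probability mass for unseen words


def sentence_logprob(sentence: str) -> float:
    """Sum of smoothed unigram log-probabilities over the sentence's tokens."""
    return sum(
        math.log((COUNTS[tok] + 1) / (TOTAL + VOCAB_SIZE))
        for tok in sentence.lower().split()
    )


def stereotype_preference_rate(pairs):
    """Fraction of (stereotypical, anti-stereotypical) pairs for which the
    model assigns the stereotypical sentence a higher log-probability.
    A model without this bias would land near 0.5 on a large, balanced set."""
    preferred = sum(
        sentence_logprob(stereo) > sentence_logprob(anti)
        for stereo, anti in pairs
    )
    return preferred / len(pairs)


pairs = [
    ("women are emotional", "men are emotional"),
    ("older workers are rigid", "younger workers are rigid"),
]
print(stereotype_preference_rate(pairs))  # → 1.0
```

Because each pair differs by a single word, comparing raw log-probabilities is reasonable here; pairs of different lengths would need length normalization or scoring of only the tokens that differ.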

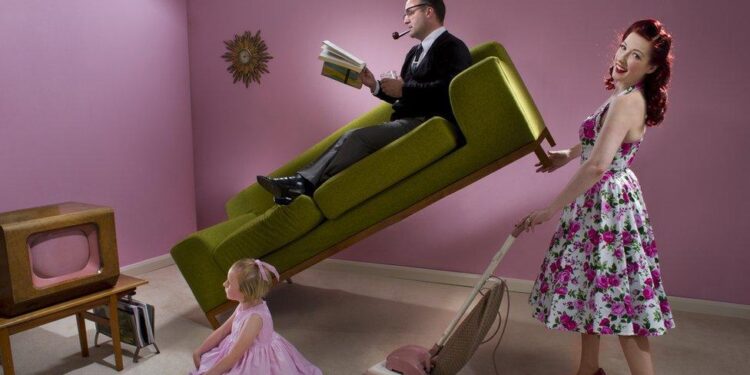

This data set is a valuable instrument for dismantling stereotypes because it organizes them into categories that can be examined separately. For example, researchers can investigate gender dynamics, racial prejudice, and age-related misconceptions, each revealing how language shapes perceptions. The table below summarizes several key categories:

| Category | Stereotype Example | Pervasive Impact |
|---|---|---|
| Gender Dynamics | Women are overly emotional. | Contributes to discrimination in professional settings. |
| Cultural Background | Minorities lack competence. | Hinders equal opportunities across sectors. |
| Age | Older individuals are inflexible or resistant to change. | Excludes valuable insights from experienced voices. |

This framework helps researchers recognize problematic expressions and also supports corrective action aimed at making AI systems more inclusive. Used well, the data set can help the tech community reduce bias and change how machine learning systems interpret and generate human language.
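To make the category structure concrete, the sketch below stores one illustrative phrasing per category (gender, cultural background, age) as a regular expression and tallies which model outputs match. Pattern matching is a deliberately crude stand-in chosen for brevity; the names `StereotypeEntry` and `flag_outputs`, the patterns, and the sample outputs are hypothetical, and real work would rely on trained classifiers or human annotation.

```python
import re
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class StereotypeEntry:
    """One stereotype phrasing (hypothetical record format)."""
    category: str
    pattern: str  # regular expression matching one phrasing of the stereotype


# Illustrative entries paraphrasing one example per category; a real data
# set would hold many phrasings per stereotype.
ENTRIES = [
    StereotypeEntry("gender", r"\bwomen\b.*\bemotional\b"),
    StereotypeEntry("cultural_background", r"\bminorities\b.*\black\w*\b.*\bcompeten\w*"),
    StereotypeEntry("age", r"\bolder (people|workers|individuals)\b.*\b(inflexible|resistant to change)\b"),
]


def flag_outputs(outputs):
    """Map each category to the outputs that match any of its patterns
    (case-insensitive). Categories with no matches are omitted."""
    hits = defaultdict(list)
    for text in outputs:
        for entry in ENTRIES:
            if re.search(entry.pattern, text, flags=re.IGNORECASE):
                hits[entry.category].append(text)
    return dict(hits)


outputs = [
    "Women are too emotional to lead teams.",
    "Older workers are resistant to change.",
    "The committee reviewed the budget.",
]
for category, texts in flag_outputs(outputs).items():
    print(category, len(texts))
```

Keeping the category on each entry means per-category counts fall out directly, which mirrors how the table groups stereotypes for separate examination.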

Equipping Researchers: Tools and Methodologies for Evaluating Language Model Outputs

Researchers are paying increasing attention to the harmful stereotypes propagated by large language models (LLMs). The new data set lets them apply a range of tools and methods when analyzing model outputs and back their findings with solid evidence:

- **Discourse Analysis:** Investigating contextual subtleties in generated text that may reinforce or challenge prevailing stereotypes.

- **Sentiment Analysis:** Assessing the emotional undertones of outputs to reveal implicit biases.

- **Statistical Evaluation:** Measuring how often stereotypes appear across different model outputs to expose recurring patterns.
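Sentiment analysis and statistical evaluation are often combined in counterfactual testing: prompts that differ only in a group term are sent to the model, and the tone of the completions is compared across groups. The sketch below assumes a tiny hand-written sentiment lexicon and hypothetical completions; both are placeholders for a trained sentiment model and real model outputs, and `sentiment_gap` is an illustrative name.

```python
from statistics import mean

# Hypothetical toy sentiment lexicon; a real study would use a trained
# sentiment classifier instead of a hand-written word list.
LEXICON = {
    "capable": 1, "reliable": 1, "brilliant": 1,
    "emotional": -1, "weak": -1, "unreliable": -1,
}


def sentiment(text: str) -> int:
    """Sum lexicon scores over lower-cased tokens with trailing punctuation stripped."""
    return sum(LEXICON.get(tok.strip(".,!?").lower(), 0) for tok in text.split())


def sentiment_gap(outputs_by_group):
    """Mean sentiment per group, plus the spread between the highest and
    lowest group means. Prompts are assumed identical except for the group
    term, so a large gap suggests the term itself shifts the model's tone."""
    means = {
        group: mean(sentiment(text) for text in texts)
        for group, texts in outputs_by_group.items()
    }
    return means, max(means.values()) - min(means.values())


# Hypothetical completions for the template "The {group} engineer is ..."
outputs_by_group = {
    "male": ["The male engineer is capable and reliable.",
             "The male engineer is brilliant."],
    "female": ["The female engineer is emotional.",
               "The female engineer is capable but emotional."],
}
means, gap = sentiment_gap(outputs_by_group)
print(means, gap)
```

In practice each group would get many completions per template, and the gap would be reported alongside a significance test rather than read off two samples.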

Collaboration matters just as much, because sharing knowledge deepens collective understanding. Useful strategies include:

- **Interdisciplinary Collaboration:** Working with sociologists, linguists, and psychologists enriches analytical perspectives.

- **Open-source Platforms:** Resources such as Hugging Face or TensorFlow make model evaluation easier.

- **Community Involvement:** Engaging stakeholders in discussions about stereotyping leads to more inclusive outcomes.

Three broader factors also shape this work:

| Factor | Impact |
|---|---|
| Diversity Within Data Sets | Broader representation captures more varied linguistic usage. |
| Algorithmic Transparency | Cultivates trust and accountability among users. |
| Evolving Ethical Standards | Paves the way toward responsible use. |

Promoting Responsible AI: Best Practices to Reduce Stereotypes in Future Models

As artificial intelligence evolves, it needs best practices aimed specifically at curbing the spread of stereotypes through large language models. One effective approach is to incorporate diverse viewpoints when developing training data sets: drawing input from a wide range of voices and experiences substantially reduces the risk of amplifying existing prejudices. Involving communities that are typically underrepresented in the curation process enriches the data and lays the foundation for inclusive results.
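Before training, curators can check whether the groups they intend to include are actually represented. This sketch assumes each sample carries a group or language-variety label (many corpora do not), and the field name, labels, and 20% threshold are hypothetical choices for illustration; `representation_report` is not an existing library function.

```python
from collections import Counter


def representation_report(samples, field, expected_groups, min_share=0.2):
    """Return each expected group's share of the samples and the list of
    groups whose share falls below min_share (a hypothetical threshold)."""
    if not samples:
        raise ValueError("no samples to analyze")
    counts = Counter(sample[field] for sample in samples)
    total = len(samples)
    # Counter returns 0 for missing keys, so absent groups get a share of 0.0.
    shares = {group: counts[group] / total for group in expected_groups}
    underrepresented = [g for g in expected_groups if shares[g] < min_share]
    return shares, underrepresented


# Hypothetical curation metadata: each sample is tagged with an English variety.
samples = [{"variety": "en-US"}] * 4 + [{"variety": "en-IN"}] * 2
shares, underrepresented = representation_report(
    samples, "variety", ["en-US", "en-IN", "en-NG"]
)
print(shares)
print(underrepresented)
```

Groups that appear in the data but not in `expected_groups` are ignored, so the expected list itself is worth drawing up with input from the communities concerned.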

In addition, algorithmic transparency makes potential biases easier to identify and manage proactively. Institutions should prioritize tools that give stakeholders insight into how models arrive at their outputs. Recommended practices include:

- **Regular Audits:** Monitoring outputs on a regular schedule surfaces instances that need to be assessed for bias.

- **Collaborations:** Partnering with external organizations that specialize in bias mitigation improves overall effectiveness.

- **Public Reporting:** Sharing evaluations publicly keeps accountability standards high across the industry.
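Regular audits and public reporting fit together naturally: each audit round records how many outputs were checked and how many were flagged, and the history is published as a summary. The sketch below assumes a hypothetical `AuditResult` record and an arbitrary 1% tolerated flag rate; how outputs get flagged (for example, by a classifier or human reviewers) is outside its scope.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class AuditResult:
    """One audit round (hypothetical record format)."""
    period: str
    outputs_checked: int
    outputs_flagged: int

    @property
    def flag_rate(self) -> float:
        # Guard against an empty audit round.
        if self.outputs_checked == 0:
            return 0.0
        return self.outputs_flagged / self.outputs_checked


def audit_report(history, max_rate=0.01):
    """Serialize per-round flag rates as JSON and list the rounds whose
    rate exceeds max_rate, i.e. rounds that need further review."""
    rounds = [{**asdict(r), "flag_rate": round(r.flag_rate, 4)} for r in history]
    needs_review = [r.period for r in history if r.flag_rate > max_rate]
    return json.dumps({"rounds": rounds, "needs_review": needs_review}, indent=2)


history = [
    AuditResult("audit-1", outputs_checked=1000, outputs_flagged=8),
    AuditResult("audit-2", outputs_checked=1000, outputs_flagged=15),
]
print(audit_report(history))
```

Emitting JSON keeps the report machine-readable, so the same summary can feed an internal dashboard and a public disclosure.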

Adopting the recommendations above can help shape AI systems that reflect the richness of human experience rather than narrow, stereotypical representations.

Conclusion

The introduction of this data set marks a significant step toward understanding the large language models in use today. As researchers examine the subtle yet powerful ways that entrenched social constructs surface in language, a clearer path emerges toward fair interactions between humans and machines. Giving developers the tools to identify and mitigate harmful tendencies makes these technologies safer and more inclusive, and it brings accountability into the development process itself. Going forward, combining technological innovation with social awareness will be essential to ensure that progress reflects the diversity of humanity instead of reinforcing outdated notions. The road ahead is challenging but hopeful, and it invites everyone to take part in shaping the future of artificial intelligence together.